ETC1010: Data Modelling and Computing

Lecture 8B: Text analysis and linear models

Dr. Nicholas Tierney & Professor Di Cook

EBS, Monash U.

2019-09-20

recap

- tidying up text

- use

genius_album()to download lyrics of songs - stop_words - (I, am, be, the, this, what, we, myself)

Overview

- interactions in modelling

- tidy text

Adding interactions to the model

Remember linear regression?

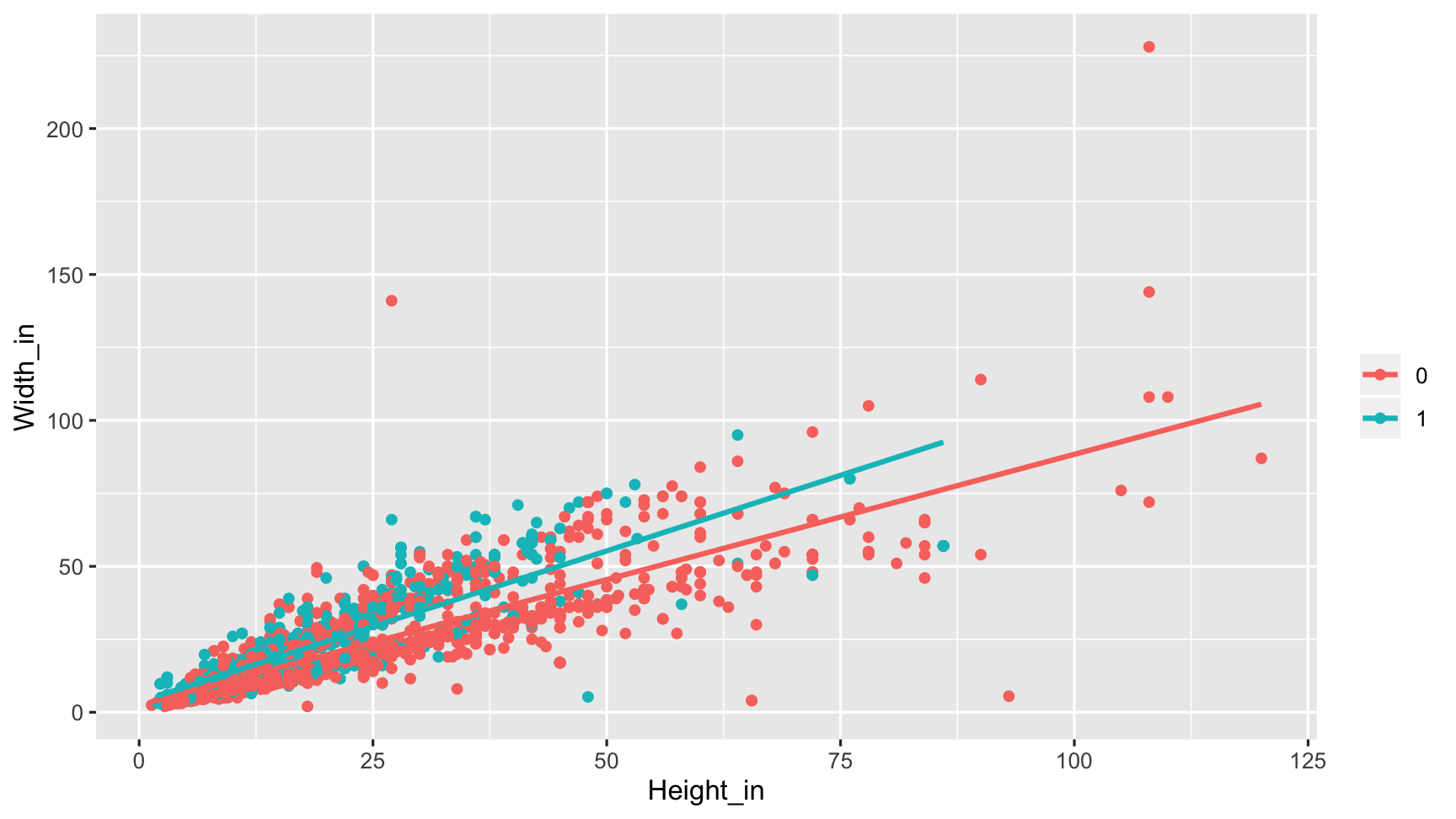

Interaction between quantitative and categorical variables

- An interaction term is needed in a model if the linear relationship is different for the response vs quantitative variable for different levels of the categorical variable.

- That is, a different slope needs to be used/estimated for each level.

Interaction between quantitative and categorical variables

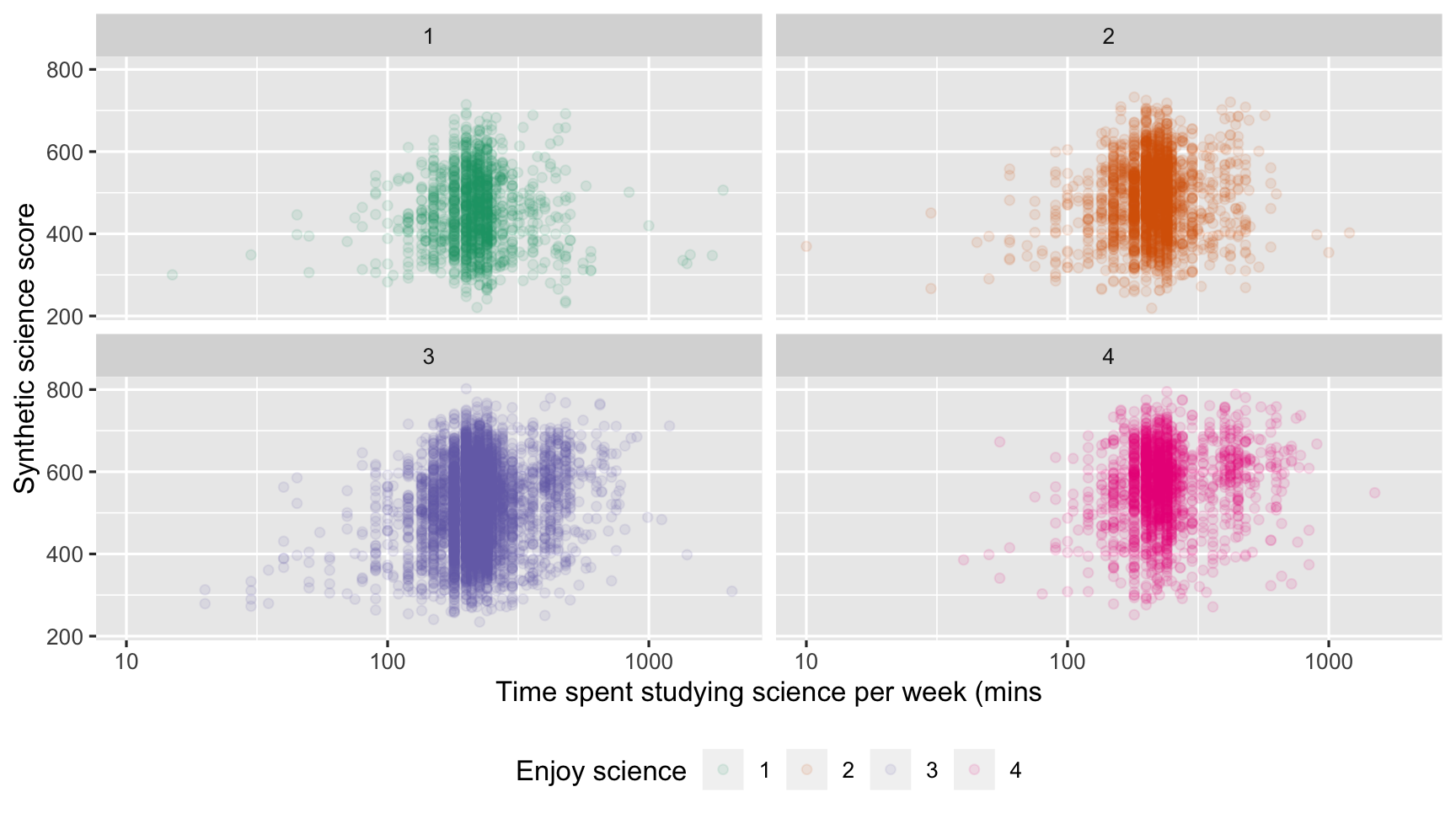

- Let's take a look at how this works for the 2015 OECD PISA data.

- The question to be answered is whether more time spent studying science is associated with higher science scores, and how this varies with enjoyment of science.

PISA data

pisa_au <- read_csv("data/pisa_au.csv")pisa_au## # A tibble: 14,530 x 44## state schtype yr birthmonth birthyr gender desk room computer internet solarpanels## <chr> <chr> <chr> <dbl> <dbl> <chr> <dbl> <dbl> <dbl> <dbl> <chr> ## 1 QLD Gov Y10 10 1999 female 1 2 1 1 0036001 ## 2 QLD Gov Y10 2 2000 female 2 1 1 1 0036002 ## 3 QLD Gov Y10 3 2000 female 1 1 1 1 0036002 ## 4 QLD Gov Y10 7 1999 female 1 1 1 1 0036001 ## 5 QLD Gov Y10 8 1999 male 1 1 1 1 0036001 ## 6 QLD Gov Y10 3 2000 male 1 1 1 1 0036001 ## 7 QLD Gov Y10 3 2000 male 1 1 1 1 0036001 ## 8 QLD Gov Y10 1 2000 male 1 1 2 1 0036001 ## 9 QLD Gov Y10 6 1999 female 1 1 1 1 0036001 ## 10 QLD Gov Y10 9 1999 female 1 1 2 1 0036002 ## # … with 14,520 more rows, and 33 more variables: tvs <dbl>, cars <dbl>,## # music_instr <dbl>, books <dbl>, birthcnt <dbl>, mother_birthcnt <dbl>,## # father_birthcnt <dbl>, test_anxiety <dbl>, ambitious <dbl>, prefer_team <dbl>,## # make_friends_easy <dbl>, tardy <dbl>, science_fun <dbl>, breakfast <dbl>,## # work_pay <dbl>, sport <dbl>, internet_use <dbl>, install_software <dbl>,## # outhours_study <dbl>, math_time <dbl>, read_time <dbl>, science_time <dbl>,## # belong <dbl>, anxtest <dbl>, motivat <dbl>, language <dbl>, home_edres <dbl>,## # home_poss <dbl>, wealth <dbl>, stuweight <dbl>, math <dbl>, science <dbl>, read <dbl>PISA data

pisa_au %>% select(science_time, science, science_fun)## # A tibble: 14,530 x 3## science_time science science_fun## <dbl> <dbl> <dbl>## 1 210 590. NA## 2 165 557. 3## 3 210 569. 2## 4 210 529. 4## 5 210 504. 3## 6 210 473. NA## 7 135 496. NA## 8 280 336. 1## 9 210 565. 2## 10 0 605. 2## # … with 14,520 more rowsInteraction between quantitative and categorical variables

There are two possible models:

yi=β0+β1xi1+β2xi2+εi (Model 1)

yi=β0+β1xi1+β2xi2+β3xi1∗xi2+εi (Model 2)

- y= science score

- x1= science study time

- x2= science enjoyment.

Model 2 has an interaction term.

This means that the slope will be allowed to vary for the different levels of the categorical variables, science_fun.

Note on modelling

Note: Ordered factors are treated as "numeric" in the default model fit, so we should convert science_fun to be an unordered categorical variable. Also, science_time is heavily skewed so should be transformed.

pisa_au_science_log10 <- pisa_au_science %>% mutate(log_science_time = log10(science_time)) %>% mutate(science_fun_c = factor(science_fun, ordered = FALSE))mod1 <- lm(science ~ log_science_time + science_fun_c, data = pisa_au_science_log10, weights = stuweight)mod2 <- lm(science ~ log_science_time * science_fun_c, data = pisa_au_science_log10, weights = stuweight)tidy(mod1)## # A tibble: 5 x 5## term estimate std.error statistic p.value## <chr> <dbl> <dbl> <dbl> <dbl>## 1 (Intercept) 268. 14.6 18.3 1.26e- 73## 2 log_science_time 83.8 6.18 13.6 1.31e- 41## 3 science_fun_c2 31.8 3.15 10.1 9.28e- 24## 4 science_fun_c3 63.1 2.80 22.5 7.17e-110## 5 science_fun_c4 104. 3.25 32.1 3.00e-216tidy(mod2)## # A tibble: 8 x 5## term estimate std.error statistic p.value## <chr> <dbl> <dbl> <dbl> <dbl>## 1 (Intercept) 463. 43.5 10.6 2.37e-26## 2 log_science_time -0.00400 18.6 -0.000215 10.00e- 1## 3 science_fun_c2 -152. 55.5 -2.74 6.18e- 3## 4 science_fun_c3 -167. 48.1 -3.46 5.33e- 4## 5 science_fun_c4 -122. 53.6 -2.29 2.23e- 2## 6 log_science_time:science_fun_c2 78.6 23.8 3.31 9.33e- 4## 7 log_science_time:science_fun_c3 98.4 20.6 4.78 1.74e- 6## 8 log_science_time:science_fun_c4 96.9 22.8 4.25 2.11e- 5Five minute challenge

- Write out the equations for both models. (Ignore the log transformation.)

- Make a hand sketch of both models.

Which is the better model?

glance(mod1)## # A tibble: 1 x 11## r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance## <dbl> <dbl> <dbl> <dbl> <dbl> <int> <dbl> <dbl> <dbl> <dbl>## 1 0.123 0.123 387. 385. 6.87e-311 5 -66706. 1.33e5 1.33e5 1.64e9## # … with 1 more variable: df.residual <int>glance(mod2)## # A tibble: 1 x 11## r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance## <dbl> <dbl> <dbl> <dbl> <dbl> <int> <dbl> <dbl> <dbl> <dbl>## 1 0.125 0.125 387. 224. 2.62e-312 8 -66694. 1.33e5 1.33e5 1.64e9## # … with 1 more variable: df.residual <int>😲 they are both pretty bad! The interaction model (mod2) is slightly better but its really not.

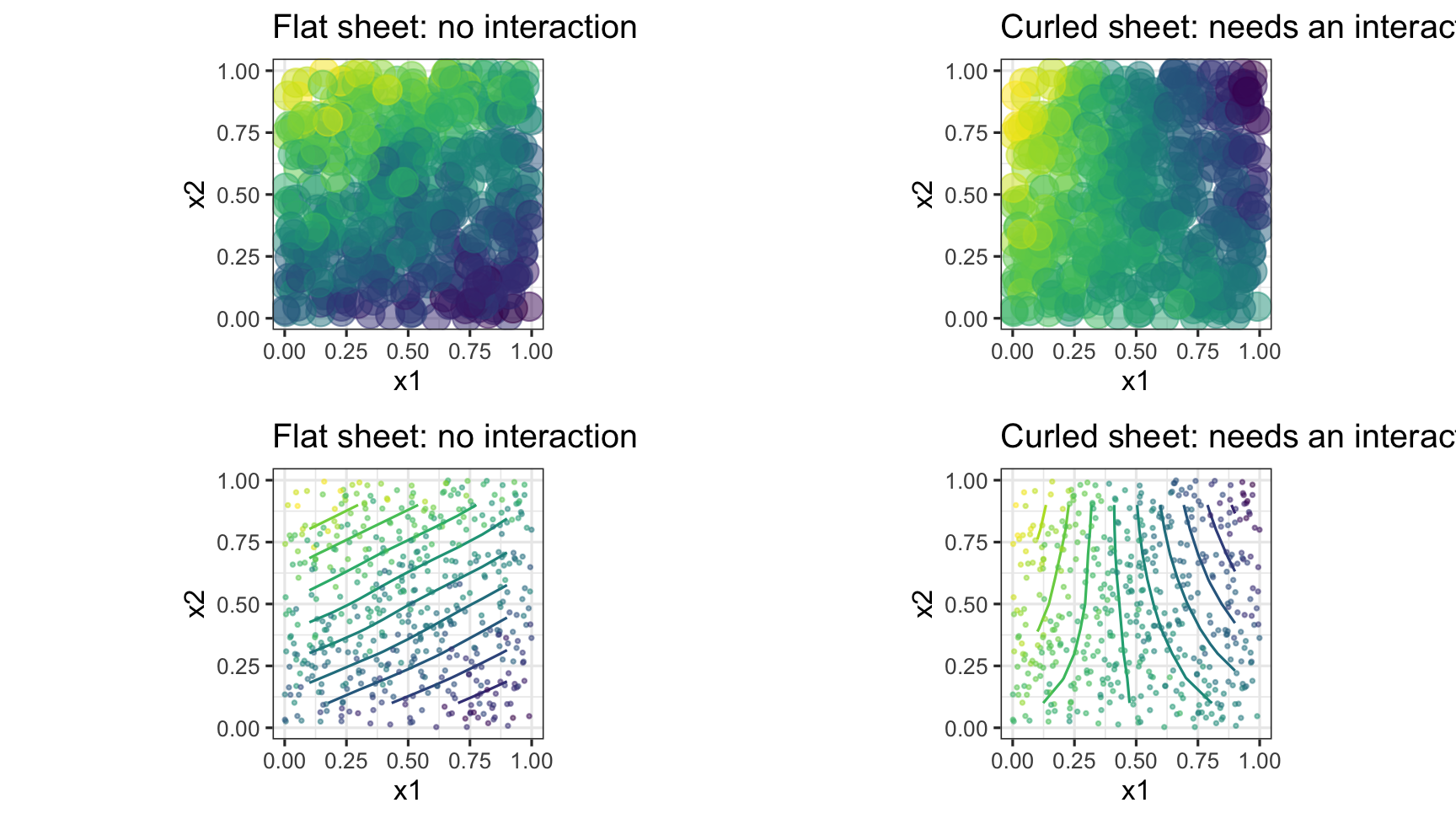

Interaction between quantitative variables

- Interactions for two quantitative variables in a model, can be thought of as allowing the paper sheet (model) to curl.

Five minute challenge

Using the PISA data: How does science score relate to text anxiety and gender?

- Make a plot of science by anxtest, coloured by gender. Does it look like an interaction term might be necessary?

- Fit the model with

sciencescore as the response andgenderandanxtest. - Try an interaction between gender and anxtest.

- Which is the better model?

Model building, Goal:

The simplest model possible that provides similar predictive accuracy to most complex model.

Model building, Approach:

- Start simply, fit main effects models (single best variable, adding several more variables independently) and try to understand the effect that each has in the model.

- Explore transformations with the aim to build a stable foundation of explanatory variables for the model.

- Check model diagnostics, residual plots.

- Explore two variable interactions, and understand effect on model.

- Explore three variable interactions.

- Use model goodness of fit to help decide on final. There may be more than one model that are almost equally as good.

Some asides on model building

- Ideally, values of explanatory variables cover all possible combinations in their domain.

- There should not be any association between explanatory variables.

- If there is, then the there is more uncertainty in the parameter estimates.

- Its like building a table with only two legs, that table would be a bit wobbly, and unstable.

Some asides on model building

- A work around is to first regress one explanatory variable on the other, and add the residuals from this fit to the model, instead of the original variable.

- That is, suppose X1,X2 are strongly linearly associated, then model X2∼b0+b1X1+e, and use e (call it X∗2) in the model instead of X2.

- You would then only be using the part of X2 that is not related to X1 to expand the model.

- This approach can be used for multiple explanatory variables that are associated.

Your Turn:

Build the best model you can for science scoreby exploring these variables:

- math score

- reading score

- tvs

- books

- breakfast

Feel free to choose others. (Code provided in exercise is just a sample, and needs to be modified.)

Sentiment analysis

Sentiment analysis tags words or phrases with an emotion, and summarises these, often as the positive or negative state, over a body of text.

Sentiment analysis: examples

- Examining effect of emotional state in twitter posts

- Determining public reactions to government policy, or new product releases

- Trying to make money in the stock market by modeling social media posts on listed companies

- Evaluating product reviews on Amazon, restaurants on zomato, or travel options on TripAdvisor

emotion

What emotion do these words elicit in you?

- summer

- hot chips

- hug

- lose

- stolen

- smile

Different sources of sentiment

- The

nrclexicon categorizes words in a binary fashion ("yes"/"no") into categories of positive, negative, anger, anticipation, disgust, fear, joy, sadness, surprise, and trust. - The

binglexicon categorizes words in a binary fashion into positive and negative categories. - The

AFINNlexicon assigns words with a score that runs between -5 and 5, with negative scores indicating negative sentiment and positive scores indicating positive sentiment.

Different sources of sentiment

get_sentiments("afinn")## # A tibble: 2,477 x 2## word value## <chr> <dbl>## 1 abandon -2## 2 abandoned -2## 3 abandons -2## 4 abducted -2## 5 abduction -2## 6 abductions -2## 7 abhor -3## 8 abhorred -3## 9 abhorrent -3## 10 abhors -3## # … with 2,467 more rowsSentiment analysis

- Once you have a bag of words, you need to join the sentiments dictionary to the words data.

- Particularly the lexicon

nrchas multiple tags per word, so you may need to use an "inner_join". inner_join()returns all rows from x where there are matching values in y, and all columns from x and y.- If there are multiple matches between x and y, all combination of the matches are returned.

Exploring sentiment in Jane Austen

janeaustenr package contains the full texts, ready for analysis for for Jane Austen's 6 completed novels:

- "Sense and Sensibility"

- "Pride and Prejudice"

- "Mansfield Park"

- "Emma"

- "Northanger Abbey"

- "Persuasion"

Exploring sentiment in Jane Austen

library(janeaustenr)library(stringr)tidy_books <- austen_books() %>% group_by(book) %>% mutate(linenumber = row_number(), chapter = cumsum(str_detect(text, regex("^chapter [\\divxlc]", ignore_case = TRUE)))) %>% ungroup() %>% unnest_tokens(word, text)Exploring sentiment in Jane Austen

tidy_books## # A tibble: 725,055 x 4## book linenumber chapter word ## <fct> <int> <int> <chr> ## 1 Sense & Sensibility 1 0 sense ## 2 Sense & Sensibility 1 0 and ## 3 Sense & Sensibility 1 0 sensibility## 4 Sense & Sensibility 3 0 by ## 5 Sense & Sensibility 3 0 jane ## 6 Sense & Sensibility 3 0 austen ## 7 Sense & Sensibility 5 0 1811 ## 8 Sense & Sensibility 10 1 chapter ## 9 Sense & Sensibility 10 1 1 ## 10 Sense & Sensibility 13 1 the ## # … with 725,045 more rowsCount joyful words in "Emma"

nrc_joy <- get_sentiments("nrc") %>% filter(sentiment == "joy")tidy_books %>% filter(book == "Emma") %>% inner_join(nrc_joy) %>% count(word, sort = TRUE)## # A tibble: 303 x 2## word n## <chr> <int>## 1 good 359## 2 young 192## 3 friend 166## 4 hope 143## 5 happy 125## 6 love 117## 7 deal 92## 8 found 92## 9 present 89## 10 kind 82## # … with 293 more rowsCount joyful words in "Emma"

"Good" is the most common joyful word, followed by "young", "friend", "hope".

All make sense until you see "found".

Is "found" a joyful word?

Your turn: go to rstudio.cloud

- What are the most common "anger" words used in Emma?

- What are the most common "surprise" words used in Emma?

Comparing lexicons

- All of the lexicons have a measure of positive or negative.

- We can tag the words in Emma by each lexicon, and see if they agree.

nrc_pn <- get_sentiments("nrc") %>% filter(sentiment %in% c("positive", "negative"))emma_nrc <- tidy_books %>% filter(book == "Emma") %>% inner_join(nrc_pn)emma_bing <- tidy_books %>% filter(book == "Emma") %>% inner_join(get_sentiments("bing")) emma_afinn <- tidy_books %>% filter(book == "Emma") %>% inner_join(get_sentiments("afinn"))Comparing lexicons

emma_nrc## # A tibble: 13,944 x 5## book linenumber chapter word sentiment## <fct> <int> <int> <chr> <chr> ## 1 Emma 15 1 clever positive ## 2 Emma 16 1 happy positive ## 3 Emma 16 1 blessings positive ## 4 Emma 17 1 existence positive ## 5 Emma 18 1 distress negative ## 6 Emma 21 1 marriage positive ## 7 Emma 22 1 mistress negative ## 8 Emma 22 1 mother negative ## 9 Emma 22 1 mother positive ## 10 Emma 23 1 indistinct negative ## # … with 13,934 more rowsComparing lexicons

emma_afinn## # A tibble: 10,901 x 5## book linenumber chapter word value## <fct> <int> <int> <chr> <dbl>## 1 Emma 15 1 clever 2## 2 Emma 15 1 rich 2## 3 Emma 15 1 comfortable 2## 4 Emma 16 1 happy 3## 5 Emma 16 1 best 3## 6 Emma 18 1 distress -2## 7 Emma 20 1 affectionate 3## 8 Emma 22 1 died -3## 9 Emma 24 1 excellent 3## 10 Emma 25 1 fallen -2## # … with 10,891 more rowsComparing lexicons

emma_nrc %>% count(sentiment) %>% mutate(n / sum(n))## # A tibble: 2 x 3## sentiment n `n/sum(n)`## <chr> <int> <dbl>## 1 negative 4473 0.321## 2 positive 9471 0.679emma_bing %>% count(sentiment) %>% mutate(n / sum(n))## # A tibble: 2 x 3## sentiment n `n/sum(n)`## <chr> <int> <dbl>## 1 negative 4809 0.402## 2 positive 7157 0.598Comparing lexicons

emma_afinn %>% mutate(sentiment = ifelse(value > 0, "positive", "negative")) %>% count(sentiment) %>% mutate(n / sum(n))## # A tibble: 2 x 3## sentiment n `n/sum(n)`## <chr> <int> <dbl>## 1 negative 4429 0.406## 2 positive 6472 0.594Your turn: Exercise 2

- Using your choice of lexicon (nrc, bing, or afinn) compute the proportion of positive words in each of Austen's books.

- Which book is the most positive? negative?

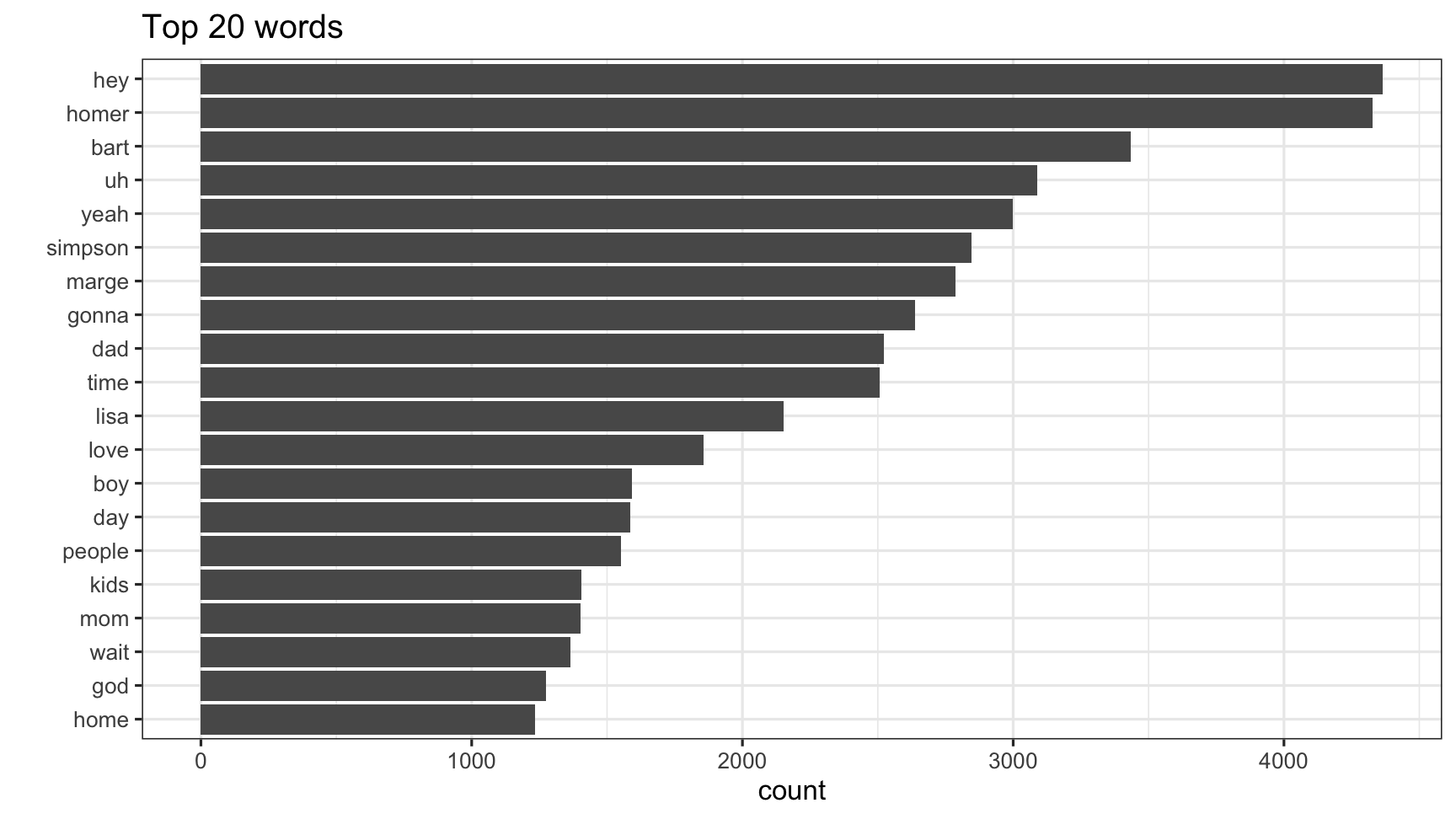

Example: Simpsons

Data from the popular animated TV series, The Simpsons, has been made available on kaggle.

simpsons_script_lines.csv: Contains the text spoken during each episode (including details about which character said it and where)simpsons_characters.csv: Contains character names and a character id

The Simpsons

scripts <- read_csv("data/simpsons_script_lines.csv")chs <- read_csv("data/simpsons_characters.csv")sc <- left_join(scripts, chs, by = c("character_id" = "id"))sc## # A tibble: 157,462 x 16## id episode_id number raw_text timestamp_in_ms speaking_line character_id location_id## <dbl> <dbl> <dbl> <chr> <dbl> <lgl> <dbl> <dbl>## 1 9549 32 209 Miss Ho… 848000 TRUE 464 3## 2 9550 32 210 Lisa Si… 856000 TRUE 9 3## 3 9551 32 211 Miss Ho… 856000 TRUE 464 3## 4 9552 32 212 Lisa Si… 864000 TRUE 9 3## 5 9553 32 213 Edna Kr… 864000 TRUE 40 3## 6 9554 32 214 Martin … 877000 TRUE 38 3## 7 9555 32 215 Edna Kr… 881000 TRUE 40 3## 8 9556 32 216 Bart Si… 882000 TRUE 8 3## 9 9557 32 217 (Apartm… 889000 FALSE NA 374## 10 9558 32 218 Lisa Si… 889000 TRUE 9 374## # … with 157,452 more rows, and 8 more variables: raw_character_text <chr>,## # raw_location_text <chr>, spoken_words <chr>, normalized_text <chr>, word_count <chr>,## # name <chr>, normalized_name <chr>, gender <chr>count the number of times a character speaks

sc %>% count(name, sort = TRUE)## # A tibble: 6,143 x 2## name n## <chr> <int>## 1 Homer Simpson 29945## 2 <NA> 19661## 3 Marge Simpson 14192## 4 Bart Simpson 13894## 5 Lisa Simpson 11573## 6 C. Montgomery Burns 3196## 7 Moe Szyslak 2853## 8 Seymour Skinner 2437## 9 Ned Flanders 2139## 10 Grampa Simpson 1952## # … with 6,133 more rowsmissing name?

sc %>% filter(is.na(name))## # A tibble: 19,661 x 16## id episode_id number raw_text timestamp_in_ms speaking_line character_id location_id## <dbl> <dbl> <dbl> <chr> <dbl> <lgl> <dbl> <dbl>## 1 9557 32 217 (Apartm… 889000 FALSE NA 374## 2 9565 32 225 (Spring… 918000 FALSE NA 3## 3 75766 263 106 (Moe's … 497000 FALSE NA 15## 4 9583 32 243 (Train … 960000 FALSE NA 375## 5 9604 32 264 (Simpso… 1070000 FALSE NA 5## 6 9655 33 0 (Simpso… 84000 FALSE NA 5## 7 9685 33 30 (Simpso… 177000 FALSE NA 5## 8 9686 33 31 (Simpso… 177000 FALSE NA 5## 9 9727 33 72 (Simpso… 349000 FALSE NA 151## 10 9729 33 74 (Simpso… 355000 FALSE NA 5## # … with 19,651 more rows, and 8 more variables: raw_character_text <chr>,## # raw_location_text <chr>, spoken_words <chr>, normalized_text <chr>, word_count <chr>,## # name <chr>, normalized_name <chr>, gender <chr>Simpsons Pre-process the text

sc %>% unnest_tokens(output = word, input = spoken_words)## # A tibble: 1,355,370 x 16## id episode_id number raw_text timestamp_in_ms speaking_line character_id location_id## <dbl> <dbl> <dbl> <chr> <dbl> <lgl> <dbl> <dbl>## 1 9549 32 209 Miss Ho… 848000 TRUE 464 3## 2 9549 32 209 Miss Ho… 848000 TRUE 464 3## 3 9549 32 209 Miss Ho… 848000 TRUE 464 3## 4 9549 32 209 Miss Ho… 848000 TRUE 464 3## 5 9549 32 209 Miss Ho… 848000 TRUE 464 3## 6 9549 32 209 Miss Ho… 848000 TRUE 464 3## 7 9549 32 209 Miss Ho… 848000 TRUE 464 3## 8 9549 32 209 Miss Ho… 848000 TRUE 464 3## 9 9549 32 209 Miss Ho… 848000 TRUE 464 3## 10 9549 32 209 Miss Ho… 848000 TRUE 464 3## # … with 1,355,360 more rows, and 8 more variables: raw_character_text <chr>,## # raw_location_text <chr>, normalized_text <chr>, word_count <chr>, name <chr>,## # normalized_name <chr>, gender <chr>, word <chr>Simpsons Pre-process the text

sc %>% unnest_tokens(output = word, input = spoken_words) %>% anti_join(stop_words)## # A tibble: 511,869 x 16## id episode_id number raw_text timestamp_in_ms speaking_line character_id location_id## <dbl> <dbl> <dbl> <chr> <dbl> <lgl> <dbl> <dbl>## 1 9549 32 209 Miss Ho… 848000 TRUE 464 3## 2 9549 32 209 Miss Ho… 848000 TRUE 464 3## 3 9549 32 209 Miss Ho… 848000 TRUE 464 3## 4 9549 32 209 Miss Ho… 848000 TRUE 464 3## 5 9550 32 210 Lisa Si… 856000 TRUE 9 3## 6 9551 32 211 Miss Ho… 856000 TRUE 464 3## 7 9551 32 211 Miss Ho… 856000 TRUE 464 3## 8 9551 32 211 Miss Ho… 856000 TRUE 464 3## 9 9551 32 211 Miss Ho… 856000 TRUE 464 3## 10 9551 32 211 Miss Ho… 856000 TRUE 464 3## # … with 511,859 more rows, and 8 more variables: raw_character_text <chr>,## # raw_location_text <chr>, normalized_text <chr>, word_count <chr>, name <chr>,## # normalized_name <chr>, gender <chr>, word <chr>Simpsons Pre-process the text

sc %>% unnest_tokens(output = word, input = spoken_words) %>% anti_join(stop_words) %>% count(word, sort = TRUE) %>% filter(!is.na(word))## # A tibble: 41,891 x 2## word n## <chr> <int>## 1 hey 4366## 2 homer 4328## 3 bart 3434## 4 uh 3090## 5 yeah 2997## 6 simpson 2846## 7 marge 2786## 8 gonna 2639## 9 dad 2521## 10 time 2508## # … with 41,881 more rowsSimpsons Pre-process the text

sc_top_20 <- sc %>% unnest_tokens(output = word, input = spoken_words) %>% anti_join(stop_words) %>% count(word, sort = TRUE) %>% filter(!is.na(word)) %>% mutate(word = factor(word, levels = rev(unique(word)))) %>% top_n(20)Simpsons plot most common words

ggplot(sc_top_20, aes(x = word, y = n)) + geom_col() + labs(x = '', y = 'count', title = 'Top 20 words') + coord_flip() + theme_bw().right-plot[

Tag the words with sentiments

Using AFINN words will be tagged on a negative to positive scale of -1 to 5.

sc_word <- sc %>% unnest_tokens(output = word, input = spoken_words) %>% anti_join(stop_words) %>% count(name, word) %>% filter(!is.na(word))sc_word## # A tibble: 220,838 x 3## name word n## <chr> <chr> <int>## 1 '30s Reporter burns 1## 2 '30s Reporter kinda 1## 3 '30s Reporter sensational 1## 4 1-Year-Old Bart beer 1## 5 1-Year-Old Bart daddy 5## 6 1-Year-Old Bart fat 1## 7 1-Year-Old Bart moustache 1## 8 1-Year-Old Bart nice 1## 9 1-Year-Old Bart smell 1## 10 1-Year-Old Bart yell 1## # … with 220,828 more rowssc_s <- sc_word %>% inner_join(get_sentiments("afinn"), by = "word")sc_s## # A tibble: 26,688 x 4## name word n value## <chr> <chr> <int> <dbl>## 1 1-Year-Old Bart nice 1 3## 2 10-Year-Old Homer chance 1 2## 3 10-Year-Old Homer cool 1 1## 4 10-Year-Old Homer die 1 -3## 5 10-Year-Old Homer died 1 -3## 6 10-Year-Old Homer dreams 1 1## 7 10-Year-Old Homer happy 1 3## 8 10-Year-Old Homer heaven 1 2## 9 10-Year-Old Homer hell 1 -4## 10 10-Year-Old Homer kiss 1 2## # … with 26,678 more rowsExamine Simpsons characters

sc_s %>% group_by(name) %>% summarise(m = mean(value)) %>% arrange(desc(m))## # A tibble: 3,409 x 2## name m## <chr> <dbl>## 1 2nd Sportscaster 4## 2 4-h Judge 4## 3 7-Year-Old Brockman 4## 4 ALEPPO 4## 5 All Kids 4## 6 Applicants 4## 7 Australian 4## 8 Bill James 4## 9 Canadian Player 4## 10 Carl Kasell 4## # … with 3,399 more rowsExamine Simpsons characters: Focus on the main characters.

keep <- sc %>% count(name, sort=TRUE) %>% filter(!is.na(name)) %>% filter(n > 999)sc_s %>% filter(name %in% keep$name) %>% group_by(name) %>% summarise(m = mean(value)) %>% arrange(m)## # A tibble: 16 x 2## name m## <chr> <dbl>## 1 Nelson Muntz -0.519## 2 Grampa Simpson -0.429## 3 Homer Simpson -0.428## 4 Bart Simpson -0.391## 5 Chief Wiggum -0.388## 6 Lisa Simpson -0.388## 7 Marge Simpson -0.344## 8 Apu Nahasapeemapetilon -0.339## 9 Moe Szyslak -0.313## 10 C. Montgomery Burns -0.310## 11 Ned Flanders -0.265## 12 Milhouse Van Houten -0.244## 13 Krusty the Clown -0.218## 14 Seymour Skinner -0.194## 15 Waylon Smithers -0.167## 16 Lenny Leonard -0.154Your turn: Exercise 3

Bart Simpson is featured at various ages. How has the sentiment of his words changed over his life?

Repeat the sentiment analysis with the NRC lexicon. What character is the most "angry"? "joyful"?

(if time) Example: AFL Finals tweets

The rtweet package allows you to pull tweets from the archive. It gives only the last 6-9 days worth of data. You need to have a twitter account, and you need to create an app (its really basic) in order to pull twitter data. The instructions that come from this package (https://rtweet.info) are pretty simple to follow.

(if time) Example: AFL Finals tweets

Given that it is AFL final week, I thought it might be interesting to look at tweets that use the hashtag "#AFLFinals". Once you have a developer account, this is as simple as

afl <- search_tweets( "#AFLFinals", n = 20000, include_rts = FALSE)Here is the data collected in the previous year's AFL finals.

afl <- read_rds("data/afl_twitter_past.rds")afl## # A tibble: 9,900 x 88## user_id status_id created_at screen_name text source display_text_wi…## * <chr> <chr> <dttm> <chr> <chr> <chr> <dbl>## 1 124305… 10402046… 2018-09-13 11:45:00 JimWilsonTV @GWS… Twitt… 129## 2 124305… 10385921… 2018-09-09 00:57:25 JimWilsonTV No t… Twitt… 113## 3 124305… 10380010… 2018-09-07 09:48:37 JimWilsonTV Than… Twitt… 162## 4 124305… 10382372… 2018-09-08 01:27:11 JimWilsonTV Can’… Twitt… 139## 5 124305… 10391266… 2018-09-10 12:21:15 JimWilsonTV On y… Twitt… 163## 6 124305… 10383958… 2018-09-08 11:57:28 JimWilsonTV Grea… Twitt… 101## 7 124305… 10374607… 2018-09-05 22:01:31 JimWilsonTV @NTh… Twitt… 69## 8 124305… 10371973… 2018-09-05 04:34:47 JimWilsonTV 16 y… Twitt… 176## 9 330082… 10402039… 2018-09-13 11:41:57 AlexWard64 I've… Twitt… 137## 10 303429… 10402010… 2018-09-13 11:30:30 HAWKSHEROES I'm … Twitt… 119## # … with 9,890 more rows, and 81 more variables: reply_to_status_id <chr>,## # reply_to_user_id <chr>, reply_to_screen_name <chr>, is_quote <lgl>, is_retweet <lgl>,## # favorite_count <int>, retweet_count <int>, hashtags <list>, symbols <list>,## # urls_url <list>, urls_t.co <list>, urls_expanded_url <list>, media_url <list>,## # media_t.co <list>, media_expanded_url <list>, media_type <list>,## # ext_media_url <list>, ext_media_t.co <list>, ext_media_expanded_url <list>,## # ext_media_type <chr>, mentions_user_id <list>, mentions_screen_name <list>,## # lang <chr>, quoted_status_id <chr>, quoted_text <chr>, quoted_created_at <dttm>,## # quoted_source <chr>, quoted_favorite_count <int>, quoted_retweet_count <int>,## # quoted_user_id <chr>, quoted_screen_name <chr>, quoted_name <chr>,## # quoted_followers_count <int>, quoted_friends_count <int>,## # quoted_statuses_count <int>, quoted_location <chr>, quoted_description <chr>,## # quoted_verified <lgl>, retweet_status_id <chr>, retweet_text <chr>,## # retweet_created_at <dttm>, retweet_source <chr>, retweet_favorite_count <int>,## # retweet_retweet_count <int>, retweet_user_id <chr>, retweet_screen_name <chr>,## # retweet_name <chr>, retweet_followers_count <int>, retweet_friends_count <int>,## # retweet_statuses_count <int>, retweet_location <chr>, retweet_description <chr>,## # retweet_verified <lgl>, place_url <chr>, place_name <chr>, place_full_name <chr>,## # place_type <chr>, country <chr>, country_code <chr>, geo_coords <list>,## # coords_coords <list>, bbox_coords <list>, status_url <chr>, name <chr>,## # location <chr>, description <chr>, url <chr>, protected <lgl>, followers_count <int>,## # friends_count <int>, listed_count <int>, statuses_count <int>,## # favourites_count <int>, account_created_at <dttm>, verified <lgl>, profile_url <chr>,## # profile_expanded_url <chr>, account_lang <chr>, profile_banner_url <chr>,## # profile_background_url <chr>, profile_image_url <chr>Your turn

- When was the final played last year?

- What is the range of dates of this data?

- Who is the most frequent tweeter using this hashtag?

- Are there some days that have more tweets than others?

- Are there some hours of the day that are more common tweet times?

Your Turn: Sentiment analysis

We need to break text of each tweet into words, tag words with sentiments, and make a cumulative score for each tweet.

- Which tweeter is the most positive? negative?

- Is there a day that spirits were higher in the tweets? Or when tweets were more negative?

- Does the tweeter

aflratingshave a trend in positivity or negativity?

Share and share alike

This work is licensed under a Creative Commons Attribution 4.0 International License.