ETC1010: Data Modelling and Computing

Lecture 8A: Text analysis

Dr. Nicholas Tierney & Professor Di Cook

EBS, Monash U.

2019-09-19

Recap

- linear models (are awesome)

- many models

Announcements

- Assignment 3

- Project

- Peer review marking open (soon!)

- No class on September 27 (AFL day)

Tidytext analysis

Why text analysis?

- Use realtors' text descriptions to improve the Melbourne housing price model

- Determine the extent of public discontent with train stoppages in Melbourne

- Identify the differences between the first and sixth editions of Darwin's Origin of Species

- Does the sentiment of posts on the Newcastle Jets' public Facebook page reflect their win/loss record?

Typical Process

- Read in text

- Pre-processing: remove punctuation, numbers and stop words; stem words

- Tokenise: words, sentences, ngrams, chapters

- Summarise

- Model

Packages

In addition to the tidyverse, we will be using three other packages today:

```r
library(tidytext)
library(genius)
library(gutenbergr)
```

Tidytext

Using tidy data principles can make many text mining tasks easier, more effective, and consistent with tools already in wide use.

Learn more at https://www.tidytextmining.com/, by Julia Silge and David Robinson.

What is tidy text?

```r
text <- c(
  "This will be an uncertain time for us my love",
  "I can hear the echo of your voice in my head",
  "Singing my love",
  "I can see your face there in my hands my love",
  "I have been blessed by your grace and care my love",
  "Singing my love"
)
text
## [1] "This will be an uncertain time for us my love"
## [2] "I can hear the echo of your voice in my head"
## [3] "Singing my love"
## [4] "I can see your face there in my hands my love"
## [5] "I have been blessed by your grace and care my love"
## [6] "Singing my love"
```

What is tidy text?

```r
text_df <- tibble(line = seq_along(text), text = text)
text_df
## # A tibble: 6 x 2
##    line text
##   <int> <chr>
## 1     1 This will be an uncertain time for us my love
## 2     2 I can hear the echo of your voice in my head
## 3     3 Singing my love
## 4     4 I can see your face there in my hands my love
## 5     5 I have been blessed by your grace and care my love
## 6     6 Singing my love
```

What is tidy text?

```r
text_df %>%
  unnest_tokens(
    output = word,
    input = text,
    token = "words" # default option
  )
## # A tibble: 49 x 2
##     line word
##    <int> <chr>
##  1     1 this
##  2     1 will
##  3     1 be
##  4     1 an
##  5     1 uncertain
##  6     1 time
##  7     1 for
##  8     1 us
##  9     1 my
## 10     1 love
## # … with 39 more rows
```

What is unnesting?
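For word tokens, the bookkeeping behind `unnest_tokens()` can be imitated in base R. Each line is lower-cased and split into words, and its line number is repeated once per word. This is a sketch under simplifying assumptions, not tidytext's implementation (tidytext delegates to the tokenizers package, which handles punctuation more carefully). The helper `tokenize_words_base()` is hypothetical.

```r
# Base R sketch of word tokenisation (hypothetical helper, not tidytext's code)
text <- c(
  "This will be an uncertain time for us my love",
  "I can hear the echo of your voice in my head",
  "Singing my love",
  "I can see your face there in my hands my love",
  "I have been blessed by your grace and care my love",
  "Singing my love"
)

tokenize_words_base <- function(lines) {
  # lower-case, then split on runs of anything that is not a letter,
  # digit or apostrophe
  pieces <- strsplit(tolower(lines), "[^a-z0-9']+")
  # drop empty strings that leading punctuation would leave behind
  pieces <- lapply(pieces, function(p) p[p != ""])
  data.frame(
    line = rep(seq_along(lines), lengths(pieces)),  # one line id per word
    word = unlist(pieces),
    stringsAsFactors = FALSE
  )
}

words <- tokenize_words_base(text)
head(words)
nrow(words)              # one row per word
sum(nchar(words$word))   # total number of letters across all words
```

For these six lines the sketch gives 49 rows, the same number of word tokens tidytext returned. Its letters add up to 171, which matches the number of character tokens in the next example.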

```r
text_df %>%
  unnest_tokens(
    output = word,
    input = text,
    token = "characters"
  )
## # A tibble: 171 x 2
##     line word
##    <int> <chr>
##  1     1 t
##  2     1 h
##  3     1 i
##  4     1 s
##  5     1 w
##  6     1 i
##  7     1 l
##  8     1 l
##  9     1 b
## 10     1 e
## # … with 161 more rows
```

What is unnesting - ngrams length 2

```r
text_df %>%
  unnest_tokens(
    output = word,
    input = text,
    token = "ngrams",
    n = 2
  )
## # A tibble: 43 x 2
##     line word
##    <int> <chr>
##  1     1 this will
##  2     1 will be
##  3     1 be an
##  4     1 an uncertain
##  5     1 uncertain time
##  6     1 time for
##  7     1 for us
##  8     1 us my
##  9     1 my love
## 10     2 i can
## # … with 33 more rows
```

What is unnesting - ngrams length 3
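N-grams of either length come from sliding a window of n consecutive words along each line, so a line with k words yields k - n + 1 n-grams. The base R sketch below assumes that model. Its helper `ngrams_base()` is hypothetical and simply drops lines shorter than n; tidytext's handling of such lines may differ.

```r
# Base R sketch of n-gram tokenisation (hypothetical helper)
text <- c(
  "This will be an uncertain time for us my love",
  "I can hear the echo of your voice in my head",
  "Singing my love",
  "I can see your face there in my hands my love",
  "I have been blessed by your grace and care my love",
  "Singing my love"
)

ngrams_base <- function(lines, n) {
  per_line <- lapply(strsplit(tolower(lines), "[^a-z0-9']+"), function(w) {
    w <- w[w != ""]
    if (length(w) < n) return(character(0))   # line too short for an n-gram
    starts <- seq_len(length(w) - n + 1)       # every window start position
    vapply(starts, function(i) paste(w[i:(i + n - 1)], collapse = " "),
           character(1))
  })
  data.frame(
    line = rep(seq_along(lines), lengths(per_line)),
    word = unlist(per_line),
    stringsAsFactors = FALSE
  )
}

bigrams  <- ngrams_base(text, 2)
trigrams <- ngrams_base(text, 3)
nrow(bigrams)    # sum of (k - 1) over lines
nrow(trigrams)   # sum of (k - 2) over lines
```

The line lengths are 10, 11, 3, 11, 11 and 3 words. That gives 43 bigrams and 37 trigrams, matching the row counts tidytext reports.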

```r
text_df %>%
  unnest_tokens(
    output = word,
    input = text,
    token = "ngrams",
    n = 3
  )
## # A tibble: 37 x 2
##     line word
##    <int> <chr>
##  1     1 this will be
##  2     1 will be an
##  3     1 be an uncertain
##  4     1 an uncertain time
##  5     1 uncertain time for
##  6     1 time for us
##  7     1 for us my
##  8     1 us my love
##  9     2 i can hear
## 10     2 can hear the
## # … with 27 more rows
```

Analyzing lyrics of one artist

Let's get more data

We'll use the genius package to get song lyric data from Genius.

genius_album() allows you to download the lyrics for an entire album in a tidy format.

Getting more data

Input: two arguments:

- artist and album. If it gives you issues, check that the album name and artist match how they are written on Genius.

Output: a tidy data frame with four columns:

- track_title: track name
- track_n: track number
- line: line number within the track
- lyric: lyrics

Greatest Australian Album of all time (as voted by triple J)

```r
od_num_five <- genius_album(
  artist = "Powderfinger",
  album = "Odyssey Number Five"
)
od_num_five
## # A tibble: 310 x 4
##    track_title         track_n  line lyric
##    <chr>                 <int> <int> <chr>
##  1 Waiting For The Sun       1     1 This will be an uncertain time for us my love
##  2 Waiting For The Sun       1     2 I can hear the echo of your voice in my head
##  3 Waiting For The Sun       1     3 Singing my love
##  4 Waiting For The Sun       1     4 I can see your face there in my hands my love
##  5 Waiting For The Sun       1     5 I have been blessed by your grace and care my love
##  6 Waiting For The Sun       1     6 Singing my love
##  7 Waiting For The Sun       1     7 There's a place for us sitting here waiting for the …
##  8 Waiting For The Sun       1     8 And it calls me back into the safe arms that I know
##  9 Waiting For The Sun       1     9 For every step you're further away from me my love
## 10 Waiting For The Sun       1    10 I grow more unsteady on my feet my love
## # … with 300 more rows
```

Save for later

```r
powderfinger <- od_num_five %>%
  mutate(
    artist = "Powderfinger",
    album = "Odyssey Number Five"
  )
powderfinger
## # A tibble: 310 x 6
##    track_title      track_n  line lyric                             artist    album
##    <chr>              <int> <int> <chr>                             <chr>     <chr>
##  1 Waiting For The…       1     1 This will be an uncertain time f… Powderfi… Odyssey Num…
##  2 Waiting For The…       1     2 I can hear the echo of your voic… Powderfi… Odyssey Num…
##  3 Waiting For The…       1     3 Singing my love                   Powderfi… Odyssey Num…
##  4 Waiting For The…       1     4 I can see your face there in my … Powderfi… Odyssey Num…
##  5 Waiting For The…       1     5 I have been blessed by your grac… Powderfi… Odyssey Num…
##  6 Waiting For The…       1     6 Singing my love                   Powderfi… Odyssey Num…
##  7 Waiting For The…       1     7 There's a place for us sitting h… Powderfi… Odyssey Num…
##  8 Waiting For The…       1     8 And it calls me back into the sa… Powderfi… Odyssey Num…
##  9 Waiting For The…       1     9 For every step you're further aw… Powderfi… Odyssey Num…
## 10 Waiting For The…       1    10 I grow more unsteady on my feet … Powderfi… Odyssey Num…
## # … with 300 more rows
```

What songs are in the album?

```r
powderfinger %>%
  distinct(track_title)
## # A tibble: 11 x 1
##    track_title
##    <chr>
##  1 Waiting For The Sun
##  2 My Happiness
##  3 The Metre
##  4 Like A Dog
##  5 Odyssey #5
##  6 Up & Down & Back Again
##  7 My Kind Of Scene
##  8 These Days
##  9 We Should Be Together Now
## 10 Thrilloilogy
## 11 Whatever Makes You Happy
```

How long are the lyrics in Powderfinger's songs?

```r
powderfinger %>%
  count(track_title) %>%
  arrange(-n)
## # A tibble: 11 x 2
##    track_title                   n
##    <chr>                     <int>
##  1 Up & Down & Back Again       46
##  2 My Happiness                 40
##  3 These Days                   35
##  4 Like A Dog                   33
##  5 Thrilloilogy                 32
##  6 My Kind Of Scene             31
##  7 The Metre                    30
##  8 We Should Be Together Now    27
##  9 Waiting For The Sun          21
## 10 Whatever Makes You Happy     11
## 11 Odyssey #5                    4
```

Tidy up the lyrics!

```r
powderfinger_lyrics <- powderfinger %>%
  unnest_tokens(output = word, input = lyric)
powderfinger_lyrics
## # A tibble: 2,076 x 6
##    track_title         track_n  line artist       album               word
##    <chr>                 <int> <int> <chr>        <chr>               <chr>
##  1 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five this
##  2 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five will
##  3 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five be
##  4 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five an
##  5 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five uncertain
##  6 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five time
##  7 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five for
##  8 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five us
##  9 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five my
## 10 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five love
## # … with 2,066 more rows
```

What are the most common words?

```r
powderfinger_lyrics %>%
  count(word) %>%
  arrange(-n)
## # A tibble: 451 x 2
##    word      n
##    <chr> <int>
##  1 the      93
##  2 you      77
##  3 i        55
##  4 and      54
##  5 to       48
##  6 it       42
##  7 in       39
##  8 a        38
##  9 for      33
## 10 me       32
## # … with 441 more rows
```

Stop words

In computing, stop words are words which are filtered out before or after processing of natural language data (text).

They usually refer to the most common words in a language, but there is not a single list of stop words used by all natural language processing tools.
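Removing stop words is a filtering join. `anti_join()` keeps only the token rows whose word is not in the stop list. Here is a base R sketch of that idea, using a few toy tokens and a small made-up stop list (not the snowball or SMART lists used below):

```r
# Toy tokens (from the first and third lyric lines) and a made-up stop list
tokens <- data.frame(
  line = c(1, 1, 1, 1, 3, 3, 3),
  word = c("this", "will", "be", "uncertain", "singing", "my", "love"),
  stringsAsFactors = FALSE
)
stop_list <- c("this", "will", "be", "my")

# anti-join on `word`: keep rows whose word is NOT in the stop list
content_words <- tokens[!(tokens$word %in% stop_list), ]
content_words$word
```

Only "uncertain", "singing" and "love" survive. Because the lists differ in size (175 snowball words versus 571 SMART words below), the choice of list changes what survives.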

English stop words

```r
get_stopwords()
## # A tibble: 175 x 2
##    word      lexicon
##    <chr>     <chr>
##  1 i         snowball
##  2 me        snowball
##  3 my        snowball
##  4 myself    snowball
##  5 we        snowball
##  6 our       snowball
##  7 ours      snowball
##  8 ourselves snowball
##  9 you       snowball
## 10 your      snowball
## # … with 165 more rows
```

Spanish stop words

```r
get_stopwords(language = "es")
## # A tibble: 308 x 2
##    word  lexicon
##    <chr> <chr>
##  1 de    snowball
##  2 la    snowball
##  3 que   snowball
##  4 el    snowball
##  5 en    snowball
##  6 y     snowball
##  7 a     snowball
##  8 los   snowball
##  9 del   snowball
## 10 se    snowball
## # … with 298 more rows
```

Various lexicons

See ?get_stopwords for more info.

```r
get_stopwords(source = "smart")
## # A tibble: 571 x 2
##    word        lexicon
##    <chr>       <chr>
##  1 a           smart
##  2 a's         smart
##  3 able        smart
##  4 about       smart
##  5 above       smart
##  6 according   smart
##  7 accordingly smart
##  8 across      smart
##  9 actually    smart
## 10 after       smart
## # … with 561 more rows
```

What are the most common words?

```r
powderfinger_lyrics
## # A tibble: 2,076 x 6
##    track_title         track_n  line artist       album               word
##    <chr>                 <int> <int> <chr>        <chr>               <chr>
##  1 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five this
##  2 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five will
##  3 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five be
##  4 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five an
##  5 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five uncertain
##  6 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five time
##  7 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five for
##  8 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five us
##  9 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five my
## 10 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five love
## # … with 2,066 more rows
```

What are the most common words?

```r
stopwords_smart <- get_stopwords(source = "smart")

powderfinger_lyrics %>%
  anti_join(stopwords_smart)
## # A tibble: 649 x 6
##    track_title         track_n  line artist       album               word
##    <chr>                 <int> <int> <chr>        <chr>               <chr>
##  1 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five uncertain
##  2 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five time
##  3 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five love
##  4 Waiting For The Sun       1     2 Powderfinger Odyssey Number Five hear
##  5 Waiting For The Sun       1     2 Powderfinger Odyssey Number Five echo
##  6 Waiting For The Sun       1     2 Powderfinger Odyssey Number Five voice
##  7 Waiting For The Sun       1     2 Powderfinger Odyssey Number Five head
##  8 Waiting For The Sun       1     3 Powderfinger Odyssey Number Five singing
##  9 Waiting For The Sun       1     3 Powderfinger Odyssey Number Five love
## 10 Waiting For The Sun       1     4 Powderfinger Odyssey Number Five face
## # … with 639 more rows
```

What are the most common words?

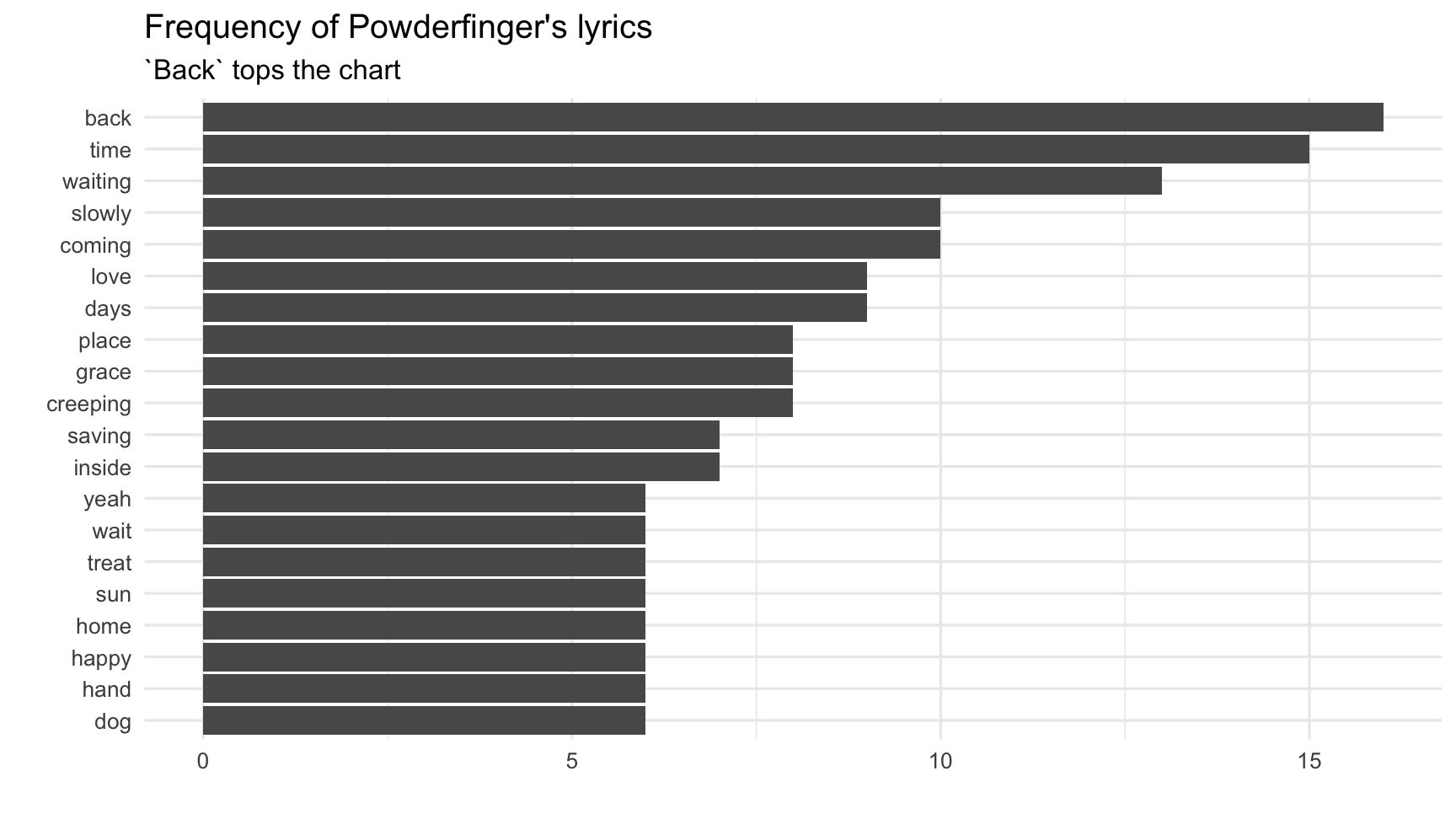

```r
powderfinger_lyrics %>%
  anti_join(stopwords_smart) %>%
  count(word) %>%
  arrange(-n)
## # A tibble: 287 x 2
##    word         n
##    <chr>    <int>
##  1 back        16
##  2 time        15
##  3 waiting     13
##  4 coming      10
##  5 slowly      10
##  6 days         9
##  7 love         9
##  8 creeping     8
##  9 grace        8
## 10 place        8
## # … with 277 more rows
```

What are the most common words?

```r
powderfinger_lyrics %>%
  anti_join(stopwords_smart) %>%
  count(word) %>%
  arrange(-n) %>%
  top_n(20) %>%
  ggplot(aes(fct_reorder(word, n), n)) +
  geom_col() +
  coord_flip() +
  theme_minimal() +
  labs(
    title = "Frequency of Powderfinger's lyrics",
    subtitle = "`Back` tops the chart",
    y = "",
    x = ""
  )
```

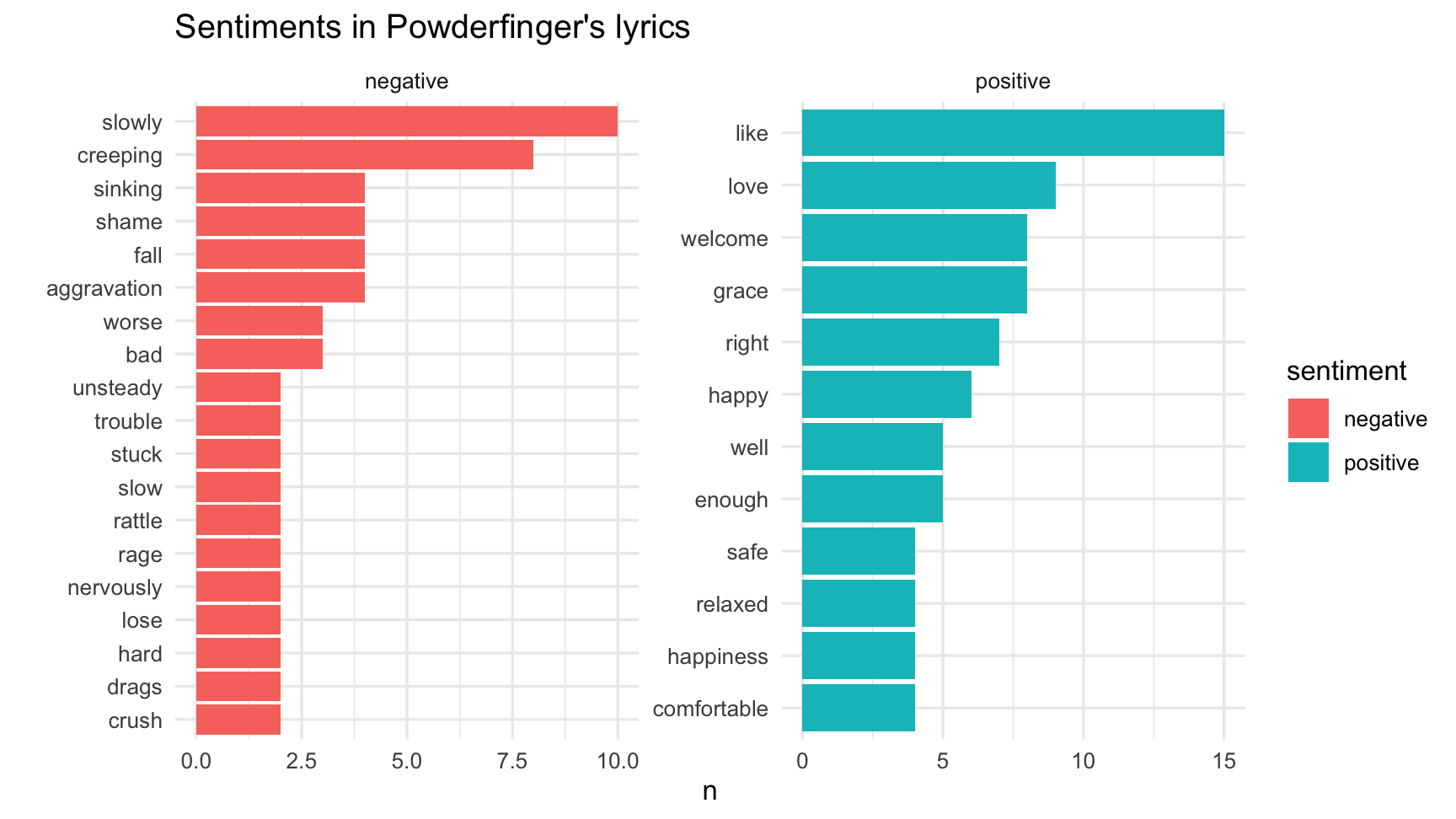

Sentiment analysis

One way to analyse the sentiment of a text is to treat the text as a combination of its individual words, and the sentiment content of the whole text as the sum of the sentiment content of those individual words.
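Here is that sum-of-words idea as a base R sketch. Each word of a line is looked up in a scored lexicon, and the scores of the words that are found are added up. The lexicon below is made up for illustration. It imitates the AFINN style (integer scores, negative means negative), but its values are not taken from AFINN.

```r
# Made-up scores in the style of AFINN; NOT the real AFINN values
lexicon <- data.frame(
  word  = c("love", "blessed", "uncertain", "unsteady"),
  value = c(3, 2, -1, -2),
  stringsAsFactors = FALSE
)

# Tokens of one lyric line: "I grow more unsteady on my feet my love"
line_words <- c("i", "grow", "more", "unsteady", "on", "my", "feet", "my", "love")

# Look up each word; words missing from the lexicon give NA and add nothing
scores <- lexicon$value[match(line_words, lexicon$word)]
sum(scores, na.rm = TRUE)   # sentiment of the whole line
```

Only two words are in the toy lexicon, so the line scores -2 + 3 = 1. Words outside the lexicon simply do not count, which is one limitation of this approach.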

Sentiment lexicons

```r
# afinn (like loughran) is fetched through the textdata package on first use
get_sentiments("afinn")
## # A tibble: 2,477 x 2
##    word       value
##    <chr>      <dbl>
##  1 abandon       -2
##  2 abandoned     -2
##  3 abandons      -2
##  4 abducted      -2
##  5 abduction     -2
##  6 abductions    -2
##  7 abhor         -3
##  8 abhorred      -3
##  9 abhorrent     -3
## 10 abhors        -3
## # … with 2,467 more rows

get_sentiments("bing")
## # A tibble: 6,786 x 2
##    word        sentiment
##    <chr>       <chr>
##  1 2-faces     negative
##  2 abnormal    negative
##  3 abolish     negative
##  4 abominable  negative
##  5 abominably  negative
##  6 abominate   negative
##  7 abomination negative
##  8 abort       negative
##  9 aborted     negative
## 10 aborts      negative
## # … with 6,776 more rows
```

Sentiment lexicons

```r
get_sentiments(lexicon = "bing")
## # A tibble: 6,786 x 2
##    word        sentiment
##    <chr>       <chr>
##  1 2-faces     negative
##  2 abnormal    negative
##  3 abolish     negative
##  4 abominable  negative
##  5 abominably  negative
##  6 abominate   negative
##  7 abomination negative
##  8 abort       negative
##  9 aborted     negative
## 10 aborts      negative
## # … with 6,776 more rows

get_sentiments(lexicon = "loughran")
## # A tibble: 4,150 x 2
##    word         sentiment
##    <chr>        <chr>
##  1 abandon      negative
##  2 abandoned    negative
##  3 abandoning   negative
##  4 abandonment  negative
##  5 abandonments negative
##  6 abandons     negative
##  7 abdicated    negative
##  8 abdicates    negative
##  9 abdicating   negative
## 10 abdication   negative
## # … with 4,140 more rows
```

Sentiments in Powderfinger's lyrics
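Two steps do the work in this analysis. `inner_join(sentiments_bing)` keeps only the tokens that also appear in the lexicon and attaches each one's label. `count(sentiment, word)` then tallies them. The sketch below shows both steps in base R on a toy token vector. Its four labels are copied from the Powderfinger counts (like and love positive, slowly and creeping negative); everything else in it is made up.

```r
# Toy tokens and four bing-style labels
tokens <- data.frame(
  word = c("like", "a", "dog", "slowly", "creeping", "like", "love"),
  stringsAsFactors = FALSE
)
labels <- data.frame(
  word      = c("like", "love", "slowly", "creeping"),
  sentiment = c("positive", "positive", "negative", "negative"),
  stringsAsFactors = FALSE
)

# inner join on `word`: tokens without a label ("a", "dog") are dropped
matched <- merge(tokens, labels, by = "word")

# count(sentiment, word): one row per pair, n = number of occurrences
counts <- aggregate(n ~ sentiment + word,
                    data = transform(matched, n = 1), FUN = sum)
counts[order(-counts$n), ]   # arrange(-n)
```

The inner join is the step where unmatched words disappear, so the totals depend on how much of the lyrics the lexicon covers.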

```r
sentiments_bing <- get_sentiments("bing")

powderfinger_lyrics %>%
  inner_join(sentiments_bing) %>%
  count(sentiment, word) %>%
  arrange(-n)
## # A tibble: 70 x 3
##    sentiment word         n
##    <chr>     <chr>    <int>
##  1 positive  like        15
##  2 negative  slowly      10
##  3 positive  love         9
##  4 negative  creeping     8
##  5 positive  grace        8
##  6 positive  welcome      8
##  7 positive  right        7
##  8 positive  happy        6
##  9 positive  enough       5
## 10 positive  well         5
## # … with 60 more rows
```

Visualising sentiments


```r
powderfinger_lyrics %>%
  inner_join(sentiments_bing) %>%
  count(sentiment, word) %>%
  arrange(desc(n)) %>%
  group_by(sentiment) %>%
  top_n(10) %>%
  ungroup() %>%
  ggplot(aes(fct_reorder(word, n), n, fill = sentiment)) +
  geom_col() +
  coord_flip() +
  facet_wrap(~sentiment, scales = "free") +
  theme_minimal() +
  labs(
    title = "Sentiments in Powderfinger's lyrics",
    x = ""
  )
```

Comparing lyrics across artists

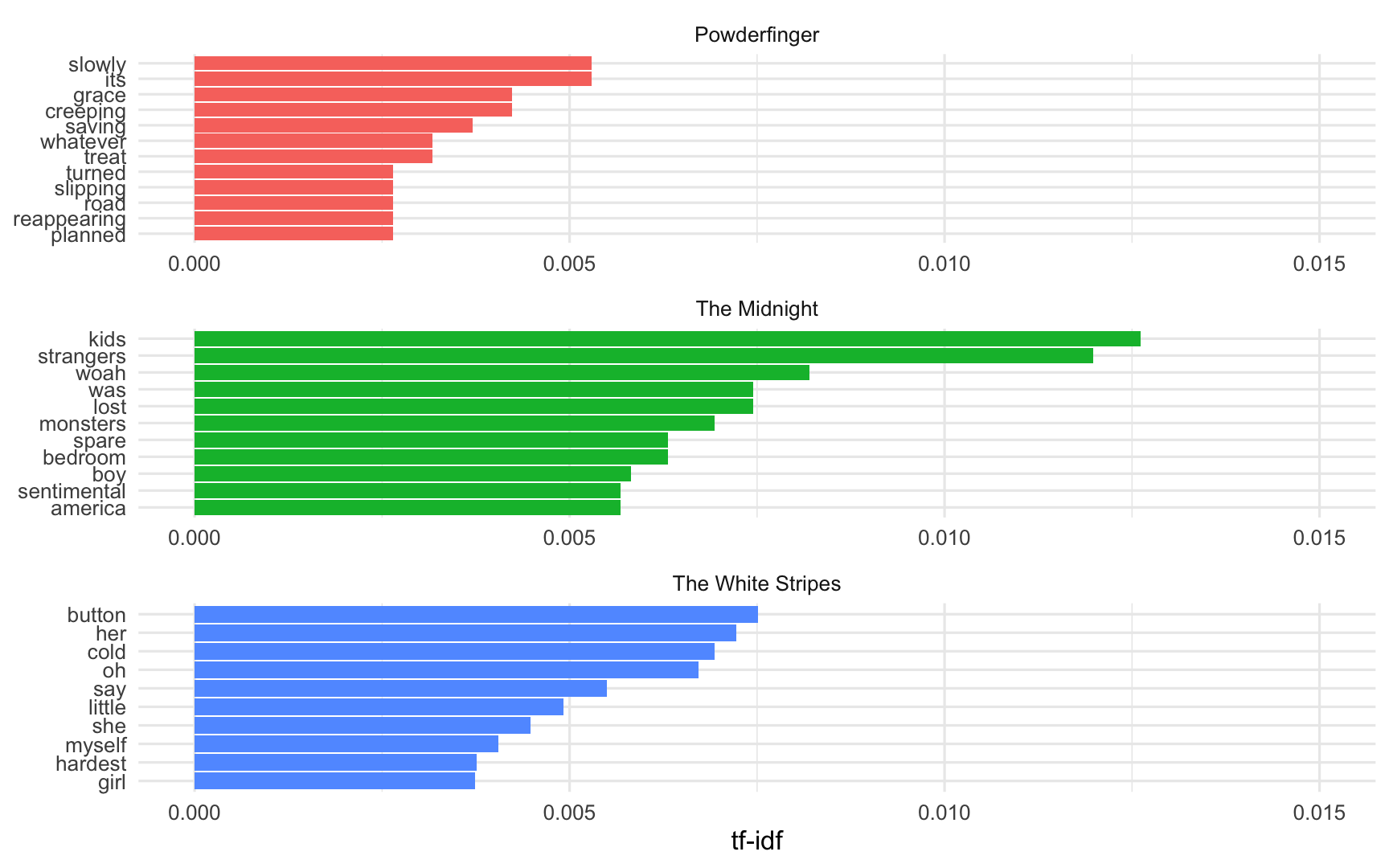

Get more data: The White Stripes & The Midnight

```r
stripes <- genius_album(artist = "The White Stripes", album = "Elephant") %>%
  mutate(artist = "The White Stripes", album = "Elephant")

midnight <- genius_album(artist = "The Midnight", album = "Kids") %>%
  mutate(artist = "The Midnight", album = "Kids")
```

Combine data:

```r
ldoc <- bind_rows(powderfinger, stripes, midnight)
ldoc
## # A tibble: 1,047 x 6
##    track_title      track_n  line lyric                             artist    album
##    <chr>              <int> <int> <chr>                             <chr>     <chr>
##  1 Waiting For The…       1     1 This will be an uncertain time f… Powderfi… Odyssey Num…
##  2 Waiting For The…       1     2 I can hear the echo of your voic… Powderfi… Odyssey Num…
##  3 Waiting For The…       1     3 Singing my love                   Powderfi… Odyssey Num…
##  4 Waiting For The…       1     4 I can see your face there in my … Powderfi… Odyssey Num…
##  5 Waiting For The…       1     5 I have been blessed by your grac… Powderfi… Odyssey Num…
##  6 Waiting For The…       1     6 Singing my love                   Powderfi… Odyssey Num…
##  7 Waiting For The…       1     7 There's a place for us sitting h… Powderfi… Odyssey Num…
##  8 Waiting For The…       1     8 And it calls me back into the sa… Powderfi… Odyssey Num…
##  9 Waiting For The…       1     9 For every step you're further aw… Powderfi… Odyssey Num…
## 10 Waiting For The…       1    10 I grow more unsteady on my feet … Powderfi… Odyssey Num…
## # … with 1,037 more rows
```

LDOC lyrics

```r
ldoc_lyrics <- ldoc %>%
  unnest_tokens(word, lyric)
ldoc_lyrics
## # A tibble: 7,618 x 6
##    track_title         track_n  line artist       album               word
##    <chr>                 <int> <int> <chr>        <chr>               <chr>
##  1 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five this
##  2 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five will
##  3 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five be
##  4 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five an
##  5 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five uncertain
##  6 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five time
##  7 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five for
##  8 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five us
##  9 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five my
## 10 Waiting For The Sun       1     1 Powderfinger Odyssey Number Five love
## # … with 7,608 more rows
```

Common LDOC lyrics - Without stop words:

```r
ldoc_lyrics %>%
  anti_join(stopwords_smart) %>%
  count(artist, word, sort = TRUE) # alternative way to sort
## # A tibble: 1,082 x 3
##    artist            word          n
##    <chr>             <chr>     <int>
##  1 The White Stripes girl         35
##  2 The White Stripes home         33
##  3 The Midnight      lost         32
##  4 The White Stripes button       26
##  5 The White Stripes love         26
##  6 The Midnight      boy          25
##  7 The White Stripes cold         24
##  8 The Midnight      kids         20
##  9 The White Stripes good         20
## 10 The Midnight      strangers    19
## # … with 1,072 more rows
```

Common LDOC lyrics - With stop words:

```r
ldoc_lyrics_counts <- ldoc_lyrics %>%
  count(artist, word, sort = TRUE)
ldoc_lyrics_counts
## # A tibble: 1,619 x 3
##    artist            word      n
##    <chr>             <chr> <int>
##  1 The White Stripes to      139
##  2 The White Stripes the     124
##  3 The White Stripes i       120
##  4 The White Stripes you     116
##  5 The Midnight      the     108
##  6 The White Stripes do      102
##  7 Powderfinger      the      93
##  8 The White Stripes a        89
##  9 The White Stripes and      82
## 10 Powderfinger      you      77
## # … with 1,609 more rows
```

What is a document about?

- Term frequency

- Inverse document frequency

$$\text{idf}(\text{term}) = \ln\left(\frac{n_{\text{documents}}}{n_{\text{documents containing term}}}\right)$$
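When the documents are the three artists, idf can take only three values. Reading the number of artists using each word off the idf column of the tf-idf outputs in this section: "the" is used by all three, "lost" by two and "kids" by one. That gives

$$\text{idf}(\text{the}) = \ln\left(\tfrac{3}{3}\right) = 0, \qquad \text{idf}(\text{lost}) = \ln\left(\tfrac{3}{2}\right) \approx 0.405, \qquad \text{idf}(\text{kids}) = \ln\left(\tfrac{3}{1}\right) \approx 1.10$$

$$\text{tf-idf}(\text{kids}, \text{The Midnight}) = 0.0115 \times 1.10 \approx 0.0126$$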

tf-idf is about comparing documents within a collection.
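Here is a base R sketch of what `bind_tf_idf(term, document, n)` computes from a table of counts. tf is each word's share of its document, idf uses the natural log as in the formula above, and tf-idf is their product. The three documents and their counts are made up for illustration.

```r
# Made-up word counts for three toy documents (long format, like count())
counts <- data.frame(
  document = c("A", "A", "A", "B", "B", "C", "C"),
  word     = c("the", "kids", "lost", "the", "lost", "the", "cold"),
  n        = c(4, 2, 2, 3, 1, 5, 5),
  stringsAsFactors = FALSE
)

# term frequency: count divided by the total number of words in that document
counts$tf <- counts$n / ave(counts$n, counts$document, FUN = sum)

# inverse document frequency: ln(number of documents / documents containing word)
n_docs <- length(unique(counts$document))
docs_with_word <- tapply(counts$document, counts$word,
                         function(d) length(unique(d)))
counts$idf <- log(n_docs / as.numeric(docs_with_word[counts$word]))

counts$tf_idf <- counts$tf * counts$idf
counts[order(-counts$tf_idf), ]
```

"the" occurs in every toy document, so its idf and tf-idf are exactly 0. The same thing happens to common words in the artist comparison. "cold" scores highest because it is frequent in one document and absent from the others.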

Calculating tf-idf: Perhaps not that exciting... What's the issue?

```r
ldoc_words <- ldoc_lyrics_counts %>%
  bind_tf_idf(term = word, document = artist, n = n)
ldoc_words
## # A tibble: 1,619 x 6
##    artist            word      n     tf   idf tf_idf
##    <chr>             <chr> <int>  <dbl> <dbl>  <dbl>
##  1 The White Stripes to      139 0.0366     0      0
##  2 The White Stripes the     124 0.0326     0      0
##  3 The White Stripes i       120 0.0316     0      0
##  4 The White Stripes you     116 0.0305     0      0
##  5 The Midnight      the     108 0.0620     0      0
##  6 The White Stripes do      102 0.0268     0      0
##  7 Powderfinger      the      93 0.0448     0      0
##  8 The White Stripes a        89 0.0234     0      0
##  9 The White Stripes and      82 0.0216     0      0
## 10 Powderfinger      you      77 0.0371     0      0
## # … with 1,609 more rows
```

Re-calculating tf-idf

```r
ldoc_words %>%
  bind_tf_idf(term = word, document = artist, n = n) %>%
  arrange(-tf_idf)
## # A tibble: 1,619 x 6
##    artist            word          n      tf   idf  tf_idf
##    <chr>             <chr>     <int>   <dbl> <dbl>   <dbl>
##  1 The Midnight      kids         20 0.0115  1.10  0.0126
##  2 The Midnight      strangers    19 0.0109  1.10  0.0120
##  3 The Midnight      woah         13 0.00746 1.10  0.00820
##  4 The White Stripes button       26 0.00684 1.10  0.00752
##  5 The Midnight      lost         32 0.0184  0.405 0.00745
##  6 The Midnight      was          32 0.0184  0.405 0.00745
##  7 The White Stripes her          25 0.00658 1.10  0.00723
##  8 The White Stripes cold         24 0.00632 1.10  0.00694
##  9 The Midnight      monsters     11 0.00631 1.10  0.00694
## 10 The White Stripes oh           63 0.0166  0.405 0.00672
## # … with 1,609 more rows
```

Your Turn:

- Go to rstudio.cloud and repeat the analyses from the lecture for artists of your choice.

- Some suggestions:

  - Daft Punk's "Discovery" or "Random Access Memories"

  - Musicals: "Les Miserables" or "Wicked"

Part Two: Tidy Text analysis on Books

Getting some books to study

Project Gutenberg provides the text of over 57,000 books free online.

Let's explore Charles Darwin's Origin of Species using the gutenbergr R package.

We need to know the Gutenberg ID of each book, which means looking it up online.

- The first edition is 1228

- The sixth edition is 2009

Packages used

- We need the tm package to remove numbers from the text, and gutenbergr to access the books.
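Removing numbers amounts to deleting every run of digits. Otherwise page and chapter numbers would end up as tokens, since word tokenisers generally keep numbers by default. If you would rather not install tm, a base R regular expression does the same job on plain strings. The helper `remove_numbers_base()` below is hypothetical and is assumed, not guaranteed, to match `tm::removeNumbers()` for ordinary ASCII digits.

```r
# Hypothetical base R stand-in for tm::removeNumbers(): delete runs of digits
remove_numbers_base <- function(x) gsub("[[:digit:]]+", "", x)

remove_numbers_base("Chapter 4 of 15 chapters")
remove_numbers_base(c("no digits here", "page 1859"))
```

The surrounding spaces are kept (the first call returns "Chapter  of  chapters"), which is harmless because tokenisation splits on whitespace anyway.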

```r
# The tm package is needed because the book has numbers
# in the text that need to be removed
# install.packages("tm")
library(tm)
library(gutenbergr)
```

Download Darwin

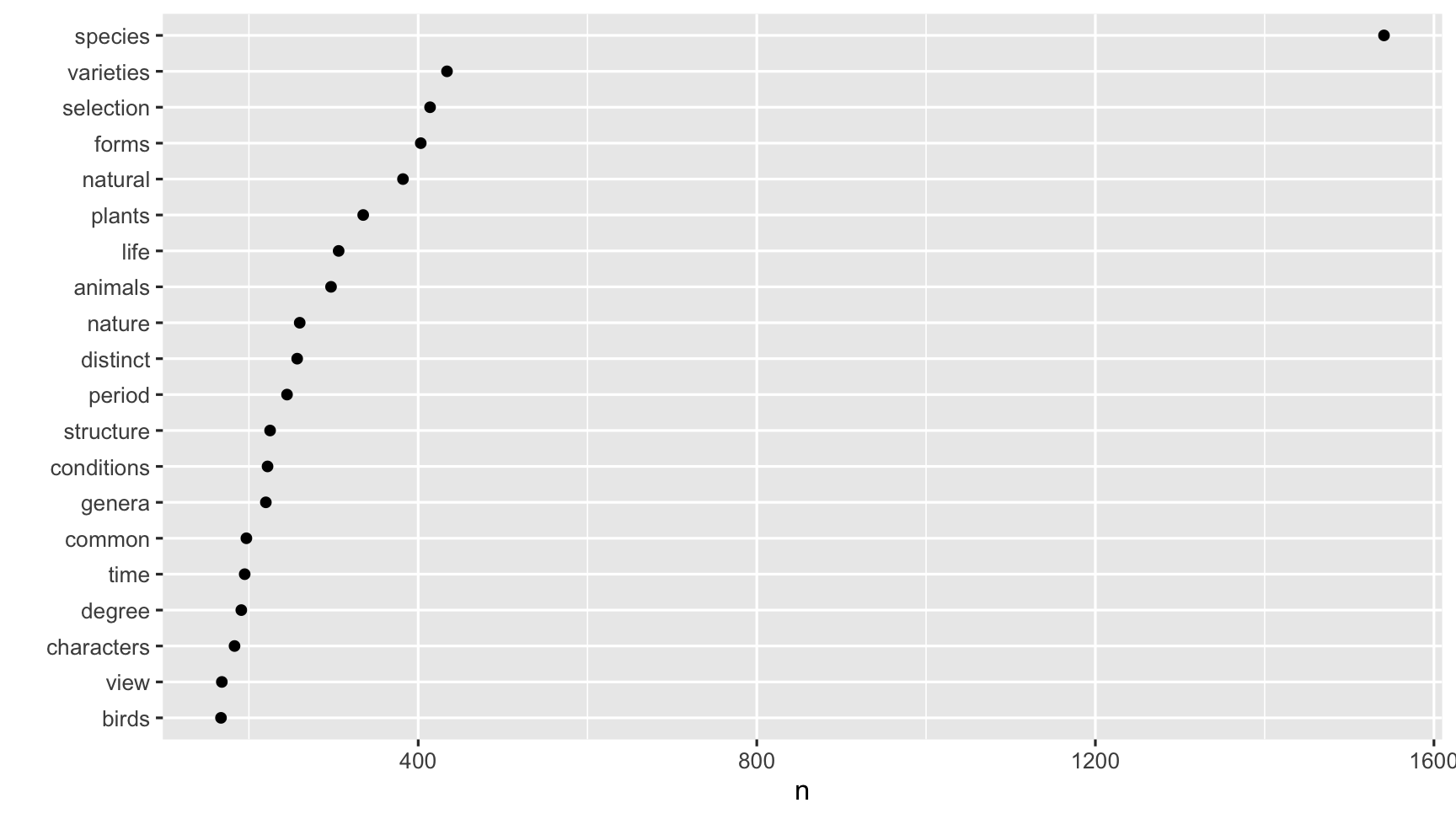

```r
darwin1 <- gutenberg_download(1228)
darwin1
## # A tibble: 15,819 x 2
##    gutenberg_id text
##           <int> <chr>
##  1         1228 ON THE ORIGIN OF SPECIES.
##  2         1228 ""
##  3         1228 OR THE PRESERVATION OF FAVOURED RACES IN THE STRUGGLE FOR LIFE.
##  4         1228 ""
##  5         1228 ""
##  6         1228 By Charles Darwin, M.A.,
##  7         1228 ""
##  8         1228 Fellow Of The Royal, Geological, Linnaean, Etc., Societies;
##  9         1228 ""
## 10         1228 Author Of 'Journal Of Researches During H.M.S. Beagle's Voyage Round The
## # … with 15,809 more rows

# remove the numbers from the text
darwin1$text <- removeNumbers(darwin1$text)
```

Tokenize

- break into one word per line

- remove the stop words

- count the words

- find the length of the words
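The last two steps, counting and measuring word length, are a frequency table plus a character count. Below is a base R sketch on a few made-up tokens that stand in for the output of the first two steps. `table()` plays the role of `count(word, sort = TRUE)` and `nchar()` plays the role of `str_length()`.

```r
# Made-up tokens, assumed to be already lower-cased and stop-word free
tokens <- c("species", "varieties", "species", "forms", "species", "forms")

# count(word, sort = TRUE): tabulate, then sort by decreasing frequency
freq <- sort(table(tokens), decreasing = TRUE)
word_counts <- data.frame(
  word = names(freq),
  n    = as.integer(freq),
  stringsAsFactors = FALSE
)

# mutate(len = str_length(word)): number of characters in each word
word_counts$len <- nchar(word_counts$word)
word_counts
```

The result has the same three columns (word, n, len) as the Darwin table, with "species" first.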

```r
darwin1_words <- darwin1 %>%
  unnest_tokens(word, text) %>%
  anti_join(stop_words) %>%
  count(word, sort = TRUE) %>%
  mutate(len = str_length(word))
darwin1_words
## # A tibble: 6,395 x 3
##    word          n   len
##    <chr>     <int> <int>
##  1 species    1541     7
##  2 varieties   434     9
##  3 selection   414     9
##  4 forms       403     5
##  5 natural     382     7
##  6 plants      335     6
##  7 life        306     4
##  8 animals     297     7
##  9 nature      260     6
## 10 distinct    257     8
## # … with 6,385 more rows
```

Analyse tokens

```r
quantile(
  darwin1_words$n,
  probs = seq(0.9, 1, 0.01)
)
##     90%     91%     92%     93%     94%     95%     96%     97%     98%     99%    100%
##   19.00   21.00   24.00   26.00   31.00   36.00   43.00   52.00   66.00  101.06 1541.00
```

Analyse tokens

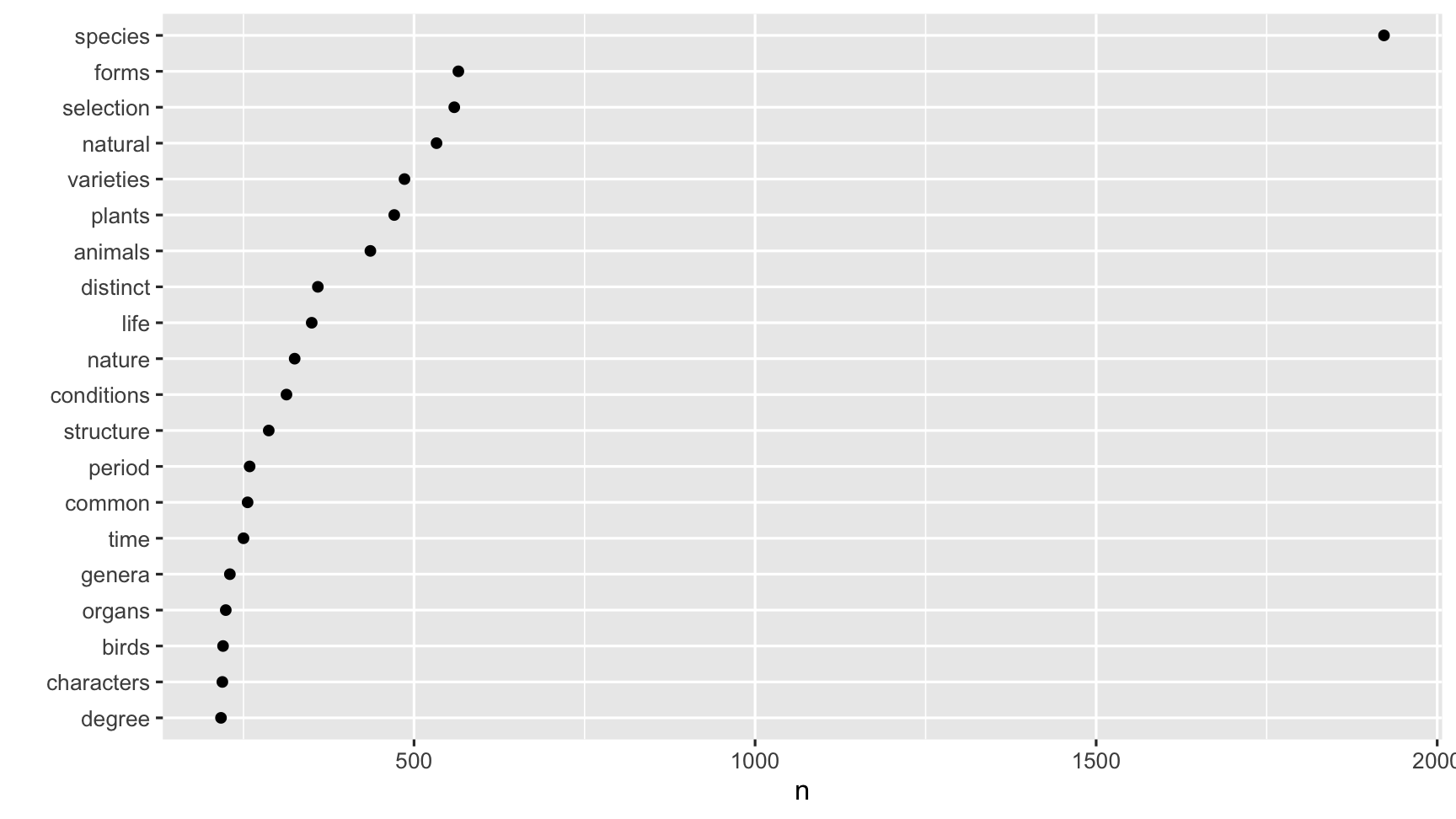

```r
darwin1_words %>%
  top_n(n = 20, wt = n) %>%
  ggplot(aes(x = n, y = fct_reorder(word, n))) +
  geom_point() +
  ylab("")
```

Download and tokenize the 6th edition.

```r
darwin6 <- gutenberg_download(2009)
darwin6$text <- removeNumbers(darwin6$text)
```

Show tokenized words

```r
darwin6_words <- darwin6 %>%
  unnest_tokens(word, text) %>%
  anti_join(stop_words) %>%
  count(word, sort = TRUE) %>%
  mutate(len = str_length(word))
darwin6_words
## # A tibble: 8,335 x 3
##    word          n   len
##    <chr>     <int> <int>
##  1 species    1922     7
##  2 forms       565     5
##  3 selection   559     9
##  4 natural     533     7
##  5 varieties   486     9
##  6 plants      471     6
##  7 animals     436     7
##  8 distinct    359     8
##  9 life        350     4
## 10 nature      325     6
## # … with 8,325 more rows

quantile(darwin6_words$n, probs = seq(0.9, 1, 0.01))
##     90%     91%     92%     93%     94%     95%     96%     97%     98%     99%    100%
##   20.00   22.00   25.00   28.00   32.00   38.00   45.00   57.00   75.00  109.66 1922.00

darwin6_words %>%
  top_n(n = 20, wt = n) %>%
  ggplot(aes(x = n, y = fct_reorder(word, n))) +
  geom_point() +
  ylab("")
```

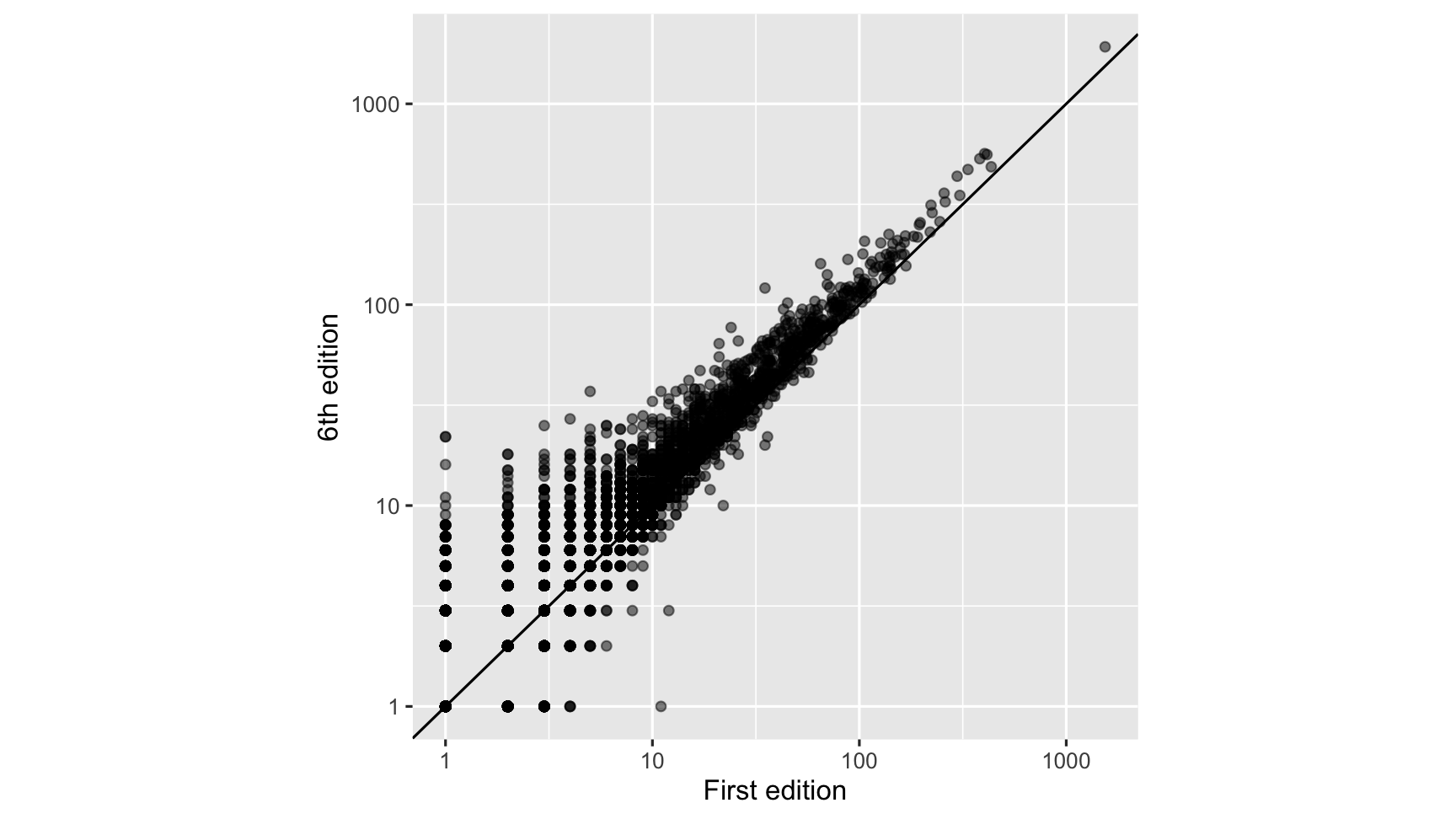

Compare the word frequency

```r
darwin <- full_join(
  darwin1_words, darwin6_words,
  by = "word"
) %>%
  rename(
    n_ed1 = n.x, len_ed1 = len.x,
    n_ed6 = n.y, len_ed6 = len.y
  )
darwin
## # A tibble: 8,604 x 5
##    word      n_ed1 len_ed1 n_ed6 len_ed6
##    <chr>     <int>   <int> <int>   <int>
##  1 species    1541       7  1922       7
##  2 varieties   434       9   486       9
##  3 selection   414       9   559       9
##  4 forms       403       5   565       5
##  5 natural     382       7   533       7
##  6 plants      335       6   471       6
##  7 life        306       4   350       4
##  8 animals     297       7   436       7
##  9 nature      260       6   325       6
## 10 distinct    257       8   359       8
## # … with 8,594 more rows
```

Plot the word frequency

```r
ggplot(darwin, aes(x = n_ed1, y = n_ed6, label = word)) +
  geom_abline(intercept = 0, slope = 1) +
  geom_point(alpha = 0.5) +
  xlab("First edition") +
  ylab("6th edition") +
  scale_x_log10() +
  scale_y_log10() +
  theme(aspect.ratio = 1)
```

Your turn:

- Does it look like the 6th edition was an expanded version of the first?

- What word is most frequent in both editions?

- Find some words that are not in the first edition but appear in the 6th.

- Find some words that are used in the first edition but not in the 6th.

- Using a linear regression model find the top few words that appear more often than expected, based on the frequency in the first edition. Find the top few words that appear less often than expected.
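As a starting point for the last three questions, here is a base R sketch on made-up counts (not Darwin's). It relies on two ideas. First, a full join leaves NA wherever a word is missing from one edition. Second, residuals from a regression of 6th-edition counts on 1st-edition counts show which words occur more or less often than expected. The log scale mirrors the log axes of the plot above; using log10 rather than natural log does not change the ranking.

```r
# Made-up counts for two editions; NOT Darwin's actual word counts
ed1 <- data.frame(word  = c("species", "forms", "plants", "life", "oldword"),
                  n_ed1 = c(100, 40, 30, 20, 5), stringsAsFactors = FALSE)
ed6 <- data.frame(word  = c("species", "forms", "plants", "life", "newword"),
                  n_ed6 = c(120, 60, 33, 21, 7), stringsAsFactors = FALSE)

# full_join(): all = TRUE keeps words from either edition, NA where missing
both <- merge(ed1, ed6, by = "word", all = TRUE)
both$word[is.na(both$n_ed1)]   # only in the 6th edition
both$word[is.na(both$n_ed6)]   # only in the 1st edition

# Regress log counts in the 6th edition on the 1st, for words in both
common <- both[complete.cases(both), ]
fit <- lm(log10(n_ed6) ~ log10(n_ed1), data = common)
common$resid <- residuals(fit)

# Large positive residual: more often than expected; negative: less often
common[order(-common$resid), c("word", "n_ed1", "n_ed6", "resid")]
```

In this toy data "forms" grows much faster than the other words and comes out on top. On the real data, the words at the top and bottom of this ordering are the ones the last question asks for.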

Book comparison

Idea: Find the important words for the content of each document by decreasing the weight of commonly used words and increasing the weight for words that are not used very much in a collection or corpus of documents.

Term frequency-inverse document frequency (tf-idf).

It helps measure how important a word is to a document within a collection of documents.

E.g., a novel in a collection of novels, or a website in a collection of websites.

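$$\text{tf}_{\text{word}} = \frac{\text{number of times the word appears in a document}}{\text{total number of words in the document}}$$

$$\text{idf}_{\text{word}} = \ln\left(\frac{\text{number of documents}}{\text{number of documents the word appears in}}\right)$$

$$\text{tf-idf} = \text{tf} \times \text{idf}$$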
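With only two documents (the two editions), a word found in just one edition gets the largest possible idf. The tf-idf table for Darwin illustrates this: "mivart" appears 28 times, only in the sixth edition, with tf = 0.000361. Hence

$$\text{idf}(\text{mivart}) = \ln\left(\frac{2}{1}\right) \approx 0.693, \qquad \text{tf-idf}(\text{mivart}) = 0.000361 \times 0.693 \approx 0.000250$$

A word used in both editions gets $\ln(2/2) = 0$, however often it appears.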

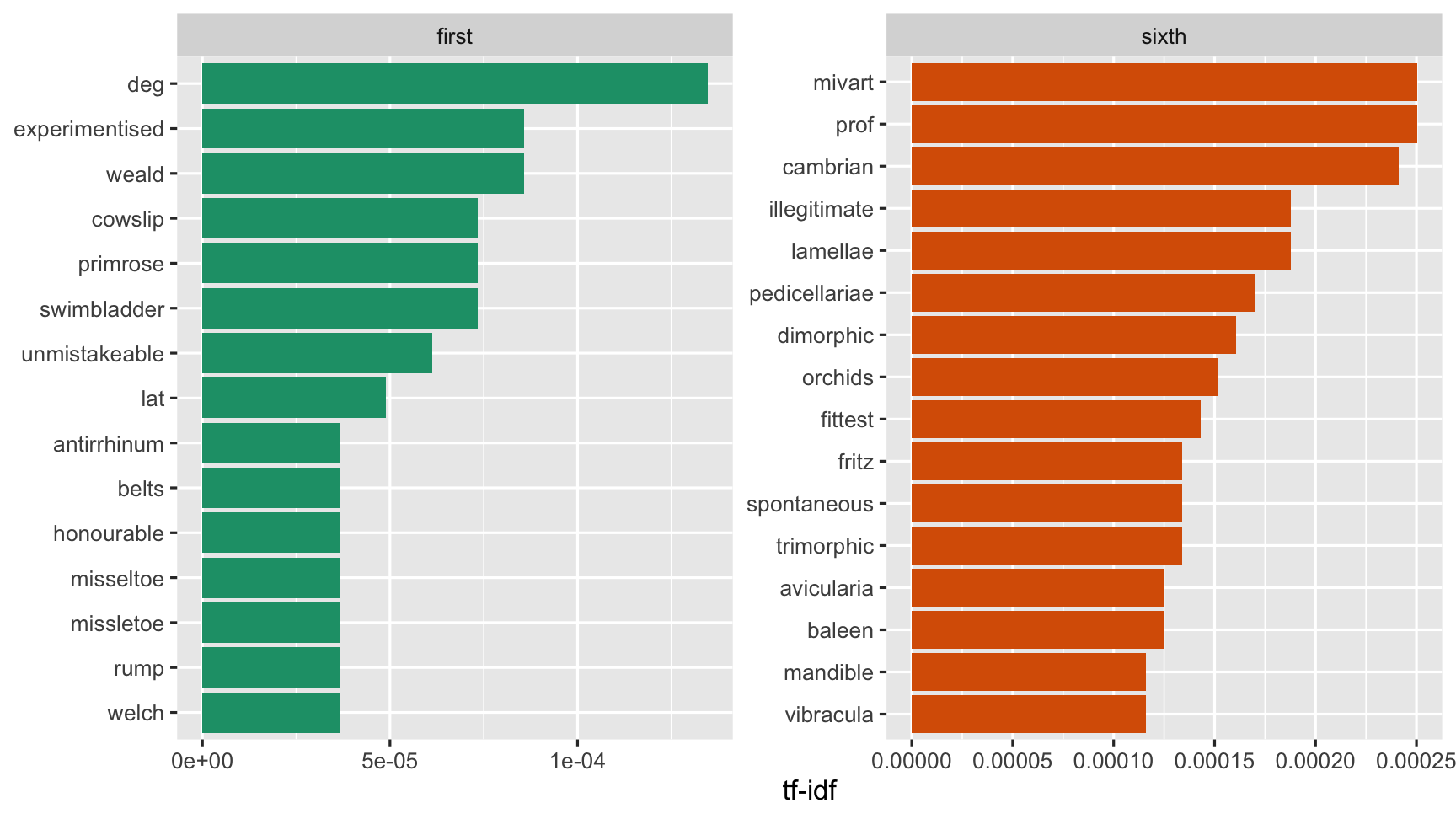

```r
darwin <- bind_rows(
  "first" = darwin1_words,
  "sixth" = darwin6_words,
  .id = "edition"
)
darwin
## # A tibble: 14,730 x 4
##    edition word          n   len
##    <chr>   <chr>     <int> <int>
##  1 first   species    1541     7
##  2 first   varieties   434     9
##  3 first   selection   414     9
##  4 first   forms       403     5
##  5 first   natural     382     7
##  6 first   plants      335     6
##  7 first   life        306     4
##  8 first   animals     297     7
##  9 first   nature      260     6
## 10 first   distinct    257     8
## # … with 14,720 more rows
```

tf-idf

```r
darwin_tf_idf <- darwin %>%
  bind_tf_idf(word, edition, n)

darwin_tf_idf %>%
  arrange(desc(tf_idf))
## # A tibble: 14,730 x 7
##    edition word             n   len       tf   idf   tf_idf
##    <chr>   <chr>        <int> <int>    <dbl> <dbl>    <dbl>
##  1 sixth   mivart          28     6 0.000361 0.693 0.000250
##  2 sixth   prof            28     4 0.000361 0.693 0.000250
##  3 sixth   cambrian        27     8 0.000348 0.693 0.000241
##  4 sixth   illegitimate    21    12 0.000271 0.693 0.000188
##  5 sixth   lamellae        21     8 0.000271 0.693 0.000188
##  6 sixth   pedicellariae   19    13 0.000245 0.693 0.000170
##  7 sixth   dimorphic       18     9 0.000232 0.693 0.000161
##  8 sixth   orchids         17     7 0.000219 0.693 0.000152
##  9 sixth   fittest         16     7 0.000206 0.693 0.000143
## 10 first   deg             11     3 0.000194 0.693 0.000135
## # … with 14,720 more rows

gg_darwin_1_vs_6 <- darwin_tf_idf %>%
  arrange(desc(tf_idf)) %>%
  mutate(word = factor(word, levels = rev(unique(word)))) %>%
  group_by(edition) %>%
  top_n(15) %>% # top 15 per edition, selected by tf_idf (the last column)
  ungroup() %>%
  ggplot(aes(x = word, y = tf_idf, fill = edition)) +
  geom_col(show.legend = FALSE) +
  labs(x = NULL, y = "tf-idf") +
  facet_wrap(~edition, ncol = 2, scales = "free") +
  coord_flip() +
  scale_fill_brewer(palette = "Dark2")

gg_darwin_1_vs_6 # print the plot
```

What do we learn?

- Mr. Mivart appears multiple times in the 6th edition

```r
str_which(darwin6$text, "Mivart")
##  [1]  5435  6973  7982  7987  7990  7998  8003  8011  8026  8059  8081  8194  8204  8229
## [15]  8234  8268  8413  8466  8506  8532  8575  8581  8603  8612  8677  8684  8759  9001
## [29]  9014  9025  9055  9063  9125 16240 20456

darwin6[5435, ]
## # A tibble: 1 x 2
##   gutenberg_id text
##          <int> <chr>
## 1         2009 to this rule, as Mr. Mivart has remarked, that it has little value.
```

What do we learn?

- The title "Prof." is used more often in the 6th edition

- There is a tendency for Latin names

- Mistletoe was misspelled in the 1st edition

Lab exercise

Text Mining with R has an example comparing historical physics textbooks: Discourse on Floating Bodies by Galileo Galilei, Treatise on Light by Christiaan Huygens, Experiments with Alternate Currents of High Potential and High Frequency by Nikola Tesla, and Relativity: The Special and General Theory by Albert Einstein. All are available on the Gutenberg project.

Work your way through the comparison of physics books in Section 3.4.

Thanks

- Dr. Mine Çetinkaya-Rundel

- Dr. Julia Silge: https://github.com/juliasilge/tidytext-tutorial

- Dr. Julia Silge and Dr. David Robinson: https://www.tidytextmining.com/

- Josiah Parry: https://github.com/JosiahParry/genius